Measuring AI Performance: Are You Leveraging LLMs’ Analytic Capabilities?

Understanding how your artificial intelligence (AI) is performing requires effective analytics tools, a strong grasp of conversational design practices, and a clear sense of your business goals and your customers’ intents.

By Mike Myer, CEO and Founder, Quiq

While a lot has shifted in the automation landscape with the widespread adoption of ChatGPT and other Large Language Models (LLMs), one thing hasn’t changed. Whether you’re building a Natural Language Understanding (NLU) bot or an LLM-powered AI Assistant, you need to understand how your AI is performing.

Anyone who has spent even a little time building, deploying, measuring, and optimizing an automated AI Assistant knows that not all resolved conversations are created equal.

Did the customer leave because they got their issue resolved? Or did they give up because your bot was giving them the same unhelpful answer over and over?

While it might seem impossible to sift through mountains of conversational data, a handful of analytics tools and conversational design best practices can go a long way toward ensuring you’re measuring (and optimizing) what matters.

Let’s dive into what those tools and best practices are, starting with techniques to measure customer satisfaction (CSAT).

How to Measure CSAT and Collect Customer Feedback

Analyzing CSAT scores helps you gauge your conversational AI’s success and identify areas for improvement. Surveys and thumbs-up feedback mechanisms are effective techniques for measuring customer satisfaction.

Integrating short surveys at the end of conversations or providing a thumbs-up option during conversations is powerful. It allows customers to express their level of satisfaction easily and directly within the context where they are already engaged. And because you can do it conversationally, you can garner better response rates and more immediate feedback.

We’ll cover a wide range of measurement techniques below, but it’s important to not overlook the simplest technique when trying to understand if an AI Assistant answered the customer’s question: Ask them.

- Use multiple formats (for instance, CSAT, free-form comments, 1-5 stars, “Was this helpful?” yes/no, or thumbs up/thumbs down).

- Collect channel-appropriate feedback. Is your primary channel short-lived like web chat, where the customer may close their browser tab and disappear, or is it a more persistent channel, like Short Message Service (SMS), where the conversation is more asynchronous in nature? Your timing should be partially dictated by the channel of engagement.

- Have a clear conversation architecture strategy in place from the beginning, so you can collect feedback at critical moments. Whether that’s after an FAQ answer is served or after a conversation has been closed, it will be vital to segment this feedback by customer path or goal.
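One way to keep several feedback formats comparable is to map every response onto a common 0-1 scale and then average it per customer path. The sketch below assumes a hypothetical record shape (`path`, `kind`, `value`) and a normalization rule chosen for illustration; it is not a Quiq API. Free-form comments are left out, since they would first need manual coding or an LLM classifier.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Union


@dataclass
class Feedback:
    path: str                 # hypothetical: the journey or goal the customer was on
    kind: str                 # "stars" (1-5), "yes_no", or "thumbs"
    value: Union[int, bool]


def normalize(fb: Feedback) -> float:
    """Map each feedback format onto a 0.0-1.0 satisfaction score (an assumed convention)."""
    if fb.kind == "stars":
        return (int(fb.value) - 1) / 4   # 1 star -> 0.0, 5 stars -> 1.0
    if fb.kind in ("yes_no", "thumbs"):
        return 1.0 if fb.value else 0.0
    raise ValueError(f"unknown feedback kind: {fb.kind}")


def satisfaction_by_path(items: list[Feedback]) -> dict[str, float]:
    """Average normalized satisfaction per path so paths can be compared side by side."""
    scores: dict[str, list[float]] = defaultdict(list)
    for fb in items:
        scores[fb.path].append(normalize(fb))
    return {path: sum(vals) / len(vals) for path, vals in scores.items()}


feedback = [
    Feedback("faq_answer", "thumbs", True),
    Feedback("faq_answer", "stars", 3),
    Feedback("order_tracking", "yes_no", False),
]
print(satisfaction_by_path(feedback))  # {'faq_answer': 0.75, 'order_tracking': 0.0}
```

Treating a 3-star rating as 0.5 is a judgment call; the point is to pick one mapping and apply it consistently so that path-level comparisons are meaningful.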

What Are Goals and How Can You Define Them?

Setting clear goals for AI performance measurement is crucial for tracking progress and evaluating success.

While it’s great when customers tell you exactly what they liked or didn’t like about their experience, it’s all but guaranteed that you’re going to get less than a 100% satisfaction rate on your customer satisfaction surveys.

This could be because your AI Assistant delivered the appropriate message, but the customer didn’t like what it said, perhaps because the answer reflects company policy. Or perhaps it correctly identified the product the customer was looking for, but that product is out of stock, and the customer is still unhappy.

On the other hand, many users never reach the point in a conversation with your AI Assistant that triggers a survey.

That’s why it’s important to be able to use different points in a customer’s journey as a means to silently measure the efficacy of your experience—whether it’s having your AI Assistant ask a certain question, collect a piece of data, or send a particular response.

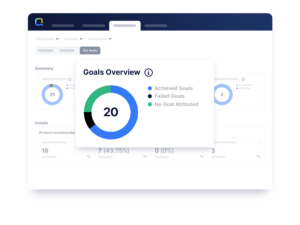

Ideally, you should be able to measure and report on key milestones in the customer journey to understand how many customers engaged with a particular part of your experience, and how many had a successful or unsuccessful outcome.

For example, if you’ve deployed a generative AI Assistant designed to help customers track and reschedule furniture deliveries, you’d like to know how many customers attempted to track their order, how many successfully rescheduled their order, and how many never successfully entered their order details and got escalated to a live agent.
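For the furniture-delivery example, counting milestones can be as simple as checking which events each conversation emitted. The event names below (`track_attempted`, `order_details_entered`, `reschedule_succeeded`, `escalated_to_agent`) are hypothetical placeholders for whatever milestone events your platform records; this is a minimal sketch, not a reporting product.

```python
# Hypothetical event names for the furniture-delivery example; substitute the
# milestone events your own platform records.
def delivery_milestones(conversations: list[list[str]]) -> dict[str, int]:
    """Count conversations that reached each milestone at least once."""
    report = {"attempted_tracking": 0, "rescheduled": 0, "escalated_without_details": 0}
    for events in conversations:
        seen = set(events)
        if "track_attempted" in seen:
            report["attempted_tracking"] += 1
        if "reschedule_succeeded" in seen:
            report["rescheduled"] += 1
        # Escalated to a live agent without ever entering valid order details.
        if "escalated_to_agent" in seen and "order_details_entered" not in seen:
            report["escalated_without_details"] += 1
    return report


conversations = [
    ["track_attempted", "order_details_entered", "reschedule_succeeded"],
    ["track_attempted", "escalated_to_agent"],
    ["faq_answered"],
]
print(delivery_milestones(conversations))
# {'attempted_tracking': 2, 'rescheduled': 1, 'escalated_without_details': 1}
```

The harder part, as the next paragraph notes, is deciding which events count as an “attempt” or a “success”; the code only counts what you have already defined.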

While the above might seem straightforward, at Quiq we’ve learned that a non-trivial amount of conversational design work goes into determining which outcomes constitute success or failure, not to mention deciding what it means for a user to “attempt” something.

The tooling you’re using should be flexible enough to allow you to cover the wide range of ways you may need to track your conversations. While channel surveys add massive value, companies should also send out Customer Effort Score (CES) surveys several days after the interaction to capture the CES across all channels in the service journey.

How to Find Where Customers Are Giving Up

Your AI Assistant most likely has several jobs it’s designed to accomplish, whether that’s providing a product recommendation or helping rebook a customer’s flight.

But don’t focus exclusively on analyzing these user journeys at the expense of having a high-level understanding of how users are engaging with your AI Assistant—and what’s changing over time.

A good set of conversational AI tools should give you a bird’s-eye view of your experience and also let you dive into the weeds, ideally at the individual conversation level, to understand exactly what’s going on and how customer usage and needs evolve over time.

While there is a seemingly limitless number of ways to analyze your AI Assistant, a handful are especially worth considering.

Traffic

Start by analyzing traffic changes. Are more or fewer customers going down a particular path than before? Can you dig into groups of conversations at these affected points and understand what’s changed?
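A simple way to spot traffic changes is to compare conversation counts per path across two periods and flag large relative swings. This sketch assumes each conversation has already been tagged with a path name; the 20% threshold is an arbitrary illustrative default.

```python
from collections import Counter


def traffic_changes(before: list[str], after: list[str], min_change: float = 0.2) -> dict[str, float]:
    """Return paths whose conversation count changed by at least `min_change` (relative).

    `before` and `after` are lists of path names, one entry per conversation.
    A path that did not exist in the earlier period is reported as +inf.
    """
    old_counts, new_counts = Counter(before), Counter(after)
    flagged = {}
    for path in sorted(set(old_counts) | set(new_counts)):
        old, new = old_counts[path], new_counts[path]
        change = float("inf") if old == 0 else (new - old) / old
        if abs(change) >= min_change:
            flagged[path] = change
    return flagged


last_week = ["track"] * 100 + ["reschedule"] * 50
this_week = ["track"] * 70 + ["reschedule"] * 52 + ["returns"] * 10
print(traffic_changes(last_week, this_week))  # {'returns': inf, 'track': -0.3}
```

The flagged paths are where it is worth pulling a sample of conversations to read.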

Customer Intent

Often related to traffic, you can identify shifting customer intent by looking to see if customers are asking different questions than they were previously. Is your AI Assistant handling this shift well, or do you need to update the AI Assistant?
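Intent shift can be checked in a similar way by comparing each intent’s share of total conversations between two periods. This sketch assumes conversations already carry an intent label (from an NLU model, an LLM classifier, or manual tagging); the 5-point threshold is illustrative.

```python
from collections import Counter


def intent_shares(intents: list[str]) -> dict[str, float]:
    """Fraction of conversations that carry each intent label."""
    counts = Counter(intents)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {intent: n / total for intent, n in counts.items()}


def rising_intents(before: list[str], after: list[str], min_points: float = 0.05) -> list[str]:
    """Intents whose share rose by at least `min_points` (0.05 = 5 percentage points).

    Intents that are new in `after` count as rising from a share of zero.
    """
    old, new = intent_shares(before), intent_shares(after)
    rises = {i: new[i] - old.get(i, 0.0) for i in new}
    return sorted((i for i, d in rises.items() if d >= min_points), key=lambda i: rises[i], reverse=True)


q1 = ["track"] * 80 + ["returns"] * 20
q2 = ["track"] * 60 + ["returns"] * 20 + ["assembly_help"] * 20
print(rising_intents(q1, q2))  # ['assembly_help']
```

A rising intent that the AI Assistant was never designed for (here, a hypothetical `assembly_help`) is a strong hint that the assistant or its knowledge base needs updating.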

Dropoff

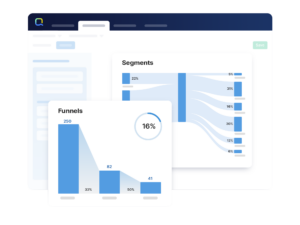

Is the completion rate lower at certain key points in your experience? Can you visualize this at a glance, with a set of funnels, or a Sankey diagram?
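The numbers behind a funnel chart are straightforward to compute: for each step, count the conversations that reached it after completing the earlier steps in order, and divide by the count at the previous step. The step names below are hypothetical.

```python
def steps_reached(events: list[str], steps: list[str]) -> int:
    """How many funnel steps this conversation completed, in order."""
    reached = 0
    for event in events:
        if reached < len(steps) and event == steps[reached]:
            reached += 1
    return reached


def funnel_report(conversations: list[list[str]], steps: list[str]) -> list[tuple[str, int, float]]:
    """For each step: (step, conversations reaching it, share of the previous step that continued)."""
    reached = [steps_reached(events, steps) for events in conversations]
    report = []
    previous = len(conversations)
    for k, step in enumerate(steps):
        count = sum(1 for r in reached if r > k)
        continue_rate = count / previous if previous else 0.0
        report.append((step, count, continue_rate))
        previous = count
    return report


steps = ["greeting", "order_lookup", "reschedule_offered", "reschedule_confirmed"]
convos = [
    ["greeting", "order_lookup", "reschedule_offered", "reschedule_confirmed"],
    ["greeting", "order_lookup", "reschedule_offered"],
    ["greeting", "order_lookup"],
    ["greeting"],
]
for step, count, rate in funnel_report(convos, steps):
    print(f"{step}: {count} ({rate:.0%} of previous step)")
```

The drop-off at each step is one minus its continue rate; the same per-step counts can feed a Sankey diagram if you also record where customers went instead.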

Sentiment

Is there a change in customer mood, whether over the course of a conversation or in response to key facets of your experience? Sentiment is an important source of performance knowledge.

Regardless of where you look to find where customers are dropping off, it’s important to have a powerful set of analytics tools that let you visualize, measure, and drill into conversations in a manner appropriate to your team’s workflow.

What’s Different About LLMs?

While much of the above still applies to analyzing AI Assistants that are powered by LLMs, this emerging AI tech presents unique measurement opportunities.

For one, LLMs do a great job of reading and understanding. You can harness this power by developing evaluation and scoring techniques via prompting that will allow you to measure the effectiveness and helpfulness of your AI Assistant.
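As an illustration of scoring via prompting, the sketch below asks an LLM to grade a transcript and return structured JSON. The prompt wording and the 1-5 helpfulness scale are assumptions, and `complete` is a hypothetical stand-in for whichever LLM client you use (it takes a prompt string and returns the model’s text).

```python
import json
from typing import Callable

# Illustrative prompt; doubled braces are literal braces after str.format().
JUDGE_PROMPT = """You are reviewing a customer-service conversation between a customer and an AI Assistant.
Rate how helpful the assistant was on a 1-5 scale and whether the customer's issue was resolved.
Reply with JSON only, for example: {{"helpfulness": 4, "resolved": true}}

Transcript:
{transcript}"""


def judge_conversation(transcript: str, complete: Callable[[str], str]) -> dict:
    """Score one transcript with an LLM.

    `complete` is a hypothetical hook: prompt string in, model text out.
    """
    raw = complete(JUDGE_PROMPT.format(transcript=transcript))
    result = json.loads(raw)  # raises if the model did not return valid JSON
    helpfulness = int(result["helpfulness"])
    if not 1 <= helpfulness <= 5:
        raise ValueError(f"helpfulness out of range: {helpfulness}")
    return {"helpfulness": helpfulness, "resolved": bool(result["resolved"])}


# A fake model for demonstration; swap in a real LLM call.
fake_llm = lambda prompt: '{"helpfulness": 4, "resolved": true}'
print(judge_conversation("Customer: Where is my sofa?\nAssistant: ...", fake_llm))
```

Before relying on scores like these as a stand-in for CSAT, it is worth spot-checking them against conversations where customers did answer a survey.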

What if you could measure CSAT without needing to ask for it? Or what if you could identify topics over time where gaps in the knowledge base exist so that you could add to them?

These types of things are possible with the right prompting and context fed into an LLM. Here are other ways to make performance measurement easier with LLM-powered AI.

Have a Large Language Model Review LLM Conversations

Not only can LLMs power the conversation, but they can also analyze it both throughout the experience and upon its conclusion. You can use LLMs to help measure and evaluate the performance of AI Assistants. For example, what was the user’s sentiment in the conversation and how did it change from beginning to end?

Measure Sentiment Shifts

Rather than simply measuring overall sentiment, layering in sentiment shift allows you to see a more nuanced and complete picture of the AI Assistant’s conversation from the customer’s baseline to the conversation’s completion.
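Sentiment shift can be computed from per-message scores by comparing the customer’s opening messages with their closing ones. This sketch assumes each customer message has already been scored on a -1 to 1 scale (by an LLM or a sentiment model); averaging over a small window is an illustrative choice to smooth out a single outlier message.

```python
from statistics import mean


def sentiment_shift(scores: list[float], window: int = 2) -> dict[str, float]:
    """Compare the customer's baseline sentiment with their closing sentiment.

    `scores` are per-message sentiment values in [-1, 1], in conversation order.
    """
    if not scores:
        raise ValueError("need at least one scored message")
    baseline = mean(scores[:window])
    final = mean(scores[-window:])
    return {"baseline": baseline, "final": final, "shift": final - baseline}


# A customer who starts frustrated and ends satisfied shows a large positive shift.
print(sentiment_shift([-0.6, -0.4, 0.0, 0.5, 0.7]))
```

A conversation with a mediocre overall average but a large positive shift may be a success, which an overall score alone would hide.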

You can also use sentiment analysis at specific points in time to guide the users directly to a live agent if the sentiment score reaches particular levels.
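A minimal escalation trigger can watch a rolling average of recent sentiment scores and signal a handoff once it falls to a threshold. The threshold of -0.5 and the three-message window are illustrative assumptions, not recommended values.

```python
from collections import deque


class EscalationMonitor:
    """Flags a conversation for live-agent handoff when the customer's recent
    sentiment stays low. Threshold and window size are illustrative defaults."""

    def __init__(self, threshold: float = -0.5, window: int = 3):
        self.threshold = threshold
        self.recent: deque[float] = deque(maxlen=window)

    def observe(self, score: float) -> bool:
        """Record one message's sentiment score; return True if we should escalate."""
        self.recent.append(score)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough messages yet to judge a trend
        return sum(self.recent) / len(self.recent) <= self.threshold


monitor = EscalationMonitor()
print([monitor.observe(s) for s in [-0.1, -0.6, -0.7, -0.9]])  # [False, False, False, True]
```

Using a window rather than a single message avoids escalating on one curt reply.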

Summarize Conversations

Transcripts can become long and tedious to read through. Instead, use an LLM to read and summarize a transcript before handing it off to an agent. Or simply use it to summarize a conversation for record keeping. Auto summarization saves time for agents and analysts alike.
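For very long transcripts, one common approach (a general technique, not necessarily what any particular platform does) is to summarize the transcript in chunks and then combine the partial summaries into one handoff note. `complete` is again a hypothetical prompt-in, text-out LLM hook, and the character budget is a rough assumption standing in for a real token count.

```python
from typing import Callable


def chunk_lines(lines: list[str], max_chars: int) -> list[str]:
    """Group transcript lines into chunks under a rough character budget.

    A single line longer than the budget becomes its own chunk.
    """
    chunks, current, size = [], [], 0
    for line in lines:
        if current and size + len(line) > max_chars:
            chunks.append("\n".join(current))
            current, size = [], 0
        current.append(line)
        size += len(line)
    if current:
        chunks.append("\n".join(current))
    return chunks


def summarize_transcript(lines: list[str], complete: Callable[[str], str], max_chars: int = 4000) -> str:
    """Summarize each chunk, then merge the partial summaries into one note for an agent."""
    partials = [
        complete("Summarize this part of a customer conversation in 2-3 sentences:\n" + chunk)
        for chunk in chunk_lines(lines, max_chars)
    ]
    if len(partials) == 1:
        return partials[0]
    return complete(
        "Combine these partial summaries into one note for a live agent. "
        "Include the customer's goal, what was tried, and what is still unresolved:\n"
        + "\n".join(partials)
    )
```

Asking for the customer’s goal and what is still unresolved keeps the summary focused on what the receiving agent needs to act on.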

How to Deploy and Manage Changes Safely

When you’re building an AI Assistant, you need to consider not only how your answers appear today, but also how they change over time as people use new phrasings and as you update policies and knowledge base articles.

Use Test Sets to Monitor and Improve AI Assistant Responses

Here’s a scenario: a knowledge base article explains how to accomplish something within your app. Then you add new functionality, along with a corresponding knowledge base article.

You can test it out with new user questions to see how it answers, but you’re also going to want to know that the things people were asking before this change are still being answered correctly and that any overlap or contention between articles is still handled well in your generated responses.

This is where test sets come in.

You can run a set of tests where you know the appropriate answer both before and after adding this new article. Make sure the responses continue to look strong even after adding in new knowledge base information.

And the best part? You can use an LLM to automate this process.

Tell the LLM the types of questions users ask, track the responses your AI Assistant gives, measure those responses, and set baselines, then run them again after the new article is added.
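The baseline-then-rerun workflow can be expressed as a small regression check. In this sketch, `assistant(question)` and `grade(question, reference, answer)` are hypothetical hooks: the first calls your AI Assistant, the second returns a score from 0 to 1 and could itself be an LLM judge. The 0.1 tolerance is an illustrative choice.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TestCase:
    question: str
    reference: str  # the answer you know is appropriate


def run_suite(
    cases: list[TestCase],
    assistant: Callable[[str], str],
    grade: Callable[[str, str, str], float],
) -> dict[str, float]:
    """Score the assistant's answer to every test question (scores in [0, 1])."""
    return {c.question: grade(c.question, c.reference, assistant(c.question)) for c in cases}


def regressions(baseline: dict[str, float], current: dict[str, float], tolerance: float = 0.1) -> list[str]:
    """Questions whose score dropped by more than `tolerance` since the baseline run."""
    return [q for q, old in baseline.items() if q in current and old - current[q] > tolerance]


# Typical use (hypothetical assistant versions):
# baseline = run_suite(cases, assistant_before_new_article, grade)
# current = run_suite(cases, assistant_after_new_article, grade)
# print(regressions(baseline, current))
```

An empty regression list, plus good scores on new questions about the added feature, is the signal that the new article did not disturb existing answers.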

Do your new responses continue to track well against the metrics? Awesome! Now you have more data for customers to interact with, you know you can answer additional questions, and you can be confident that the answers generated continue to be effective and accurate.

In addition to testing, you want to be able to monitor events as they happen over time within conversations, so you can pull out user phrases and see how customers’ behavior and language might change over time.

By recording events in your AI Assistant conversations, you can add these to test sets, build out test cases for them, and generally strengthen the robustness of your AI Assistant’s responses.
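Recorded events can feed the test set directly. The sketch below surfaces frequent user phrasings that no existing test question covers yet; the event shape (dicts with `type` and `text`) is a hypothetical assumption, and the whitespace-and-case normalization is deliberately simple.

```python
from collections import Counter


def normalize_phrase(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants match."""
    return " ".join(text.lower().split())


def new_test_candidates(
    events: list[dict], existing_questions: list[str], top_n: int = 20
) -> list[tuple[str, int]]:
    """Most frequent user phrasings not yet in the test set, with their counts.

    `events` is a hypothetical list like {"type": "user_message", "text": "..."}.
    """
    known = {normalize_phrase(q) for q in existing_questions}
    counts = Counter(
        normalize_phrase(e["text"]) for e in events if e.get("type") == "user_message"
    )
    return [(p, n) for p, n in counts.most_common() if p not in known][:top_n]


events = [
    {"type": "user_message", "text": "Where is my couch?"},
    {"type": "user_message", "text": "where is  my couch?"},
    {"type": "user_message", "text": "Track my order"},
    {"type": "answer_served", "text": "Your order ships Tuesday."},
]
print(new_test_candidates(events, ["track my order"]))  # [('where is my couch?', 2)]
```

Each candidate still needs a human-approved reference answer before it becomes a test case.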

Final Thoughts

Understanding how your AI is performing requires effective analytics tools, a strong grasp of conversational design practices, and a clear sense of your business goals and your customers’ intents.

But LLMs now present additional measurement opportunities—from leveraging them to analyze conversations and evaluate performance, to measuring sentiment shifts and automating tasks like conversation summarization.

By continuously monitoring events and recording user behavior within and across conversations, you can strengthen your AI Assistant’s responses and improve its overall performance.

CEO and Founder, Quiq

Mike is the Founder and CEO of Quiq. Before founding Quiq, Mike was Chief Product Officer & VP of Engineering at Dataminr, a startup that analyzes all of the world’s tweets in real time and detects breaking information ahead of any other source. Mike has deep expertise in customer service software, having previously built the RightNow Customer Experience solution used by many of the world’s largest consumer brands to deliver exceptional interactions.

RightNow went public in 2004 and was acquired by Oracle for $1.5B in 2011. Mike led Engineering the entire time RightNow was a standalone company and later managed a team of nearly 500 at Oracle responsible for Service Cloud. Before RightNow, Mike held various software development and architect roles at AT&T, Lucent and Bell Labs Research. Mike holds a bachelor’s and a master’s degree in Computer Science from Rutgers University.

Quiq is the Conversational Customer Experience Platform for the world’s top brands.

Learn more at Quiq.com


Generative AI is the hottest topic in CX today, and one of its most promising applications is powering GenerativeAgent. A generative AI-powered virtual agent is as capable as Tier 1 agents to increase resolution and customer satisfaction. Your customers need a virtual agent that they can speak to naturally to accomplish tasks. Now they have one.

In this session, ASAPP and PTP will deep dive into this AI-led innovation and share how they partner with clients to deploy GenerativeAgent to deliver operational improvements, increased customer satisfaction, and reduced costs. You will witness firsthand just how powerful this technology is!

You’ll gain insights into:

- Ways GenerativeAgent unlocks business value and improve CX

- Real-world examples of how the ASAPP/PTP team is deploying GenerativeAgent with a Fortune 100 client and the results they are seeing

- Practical tips to get started on your organization’s GenerativeAgent journey, including best practices and process recommendations

The world is full of famous duos (Holmes & Watson, Batman & Robin, Han & Chewie), showing us that working together is better than going alone. The same can be said for the complex and ever-changing world of customer service. How can you be expected to stay up to date with the latest CX best practices when Generative AI is changing the game, sometimes by the hour? You need an experienced and innovative partner to navigate this ever-changing world and empower you to create craveable customer experiences.

By attending this session, you will learn:

- How Progressive identified the right partner to entrust with their customers

- How Progressive saves over $20M in annual operating expenses

- How Progressive achieves 97% intent accuracy to empower customers”

Mosaicx is a cloud-based solution that uses conversational AI, machine learning and natural language processing technologies to automate interactions with customers and employees. Its IVA technology delivers fast, easy, personalized service through industry-leading voice recognition and digital messaging capabilities, creating positive interactions that drive improved customer and employee satisfaction. Mosaicx makes conversational AI surprisingly simple.

AI in customer service is here. While some brands are still considering how it fits into their CX strategy, others are already hard at work implementing it in their day-to-day to handle volume, streamline processes, and grow customer loyalty.

In this panel led by Gladly, hear practical strategies and personal experiences from leaders on how they’ve applied AI across their support teams and found success.

They’ll discuss how to use AI to:

- Manage volume with intelligent solutions that meet every business need, from powerful self-service features to effective automation tools for agents.

- Scale and meet changing contact center needs, such as seasonal spikes in call volume, without increasing costs.

- Empower your agents to focus on high-value interactions — such as cross-selling and upselling — that drive revenue and customer satisfaction.

In an era where customer expectations continually evolve, transforming your organization to meet and exceed these demands is imperative. Join keynote speaker Kimberly Masters, Senior Group Director at Sam’s Club, as she unveils the journey of transformation that led to significant improvements in customer satisfaction and operational efficiency.

Kimberly emphasizes that a successful transformation starts and ends with an obsessive focus on enhancing experiences for members, customers, and associates. Achieving this requires a unified vision and measures of success across all levels, both internally and outsourced. Embracing boldness and the courage to challenge the status quo, coupled with integrating process, technology, and resources, is crucial. Timing is everything, especially concerning GenAI, and is essential for getting the experience right.

Her keynote will provide invaluable insights and actionable strategies for organizations looking to embark on their path to transformation, emphasizing the importance of a strong foundation, strategic alignment, and a bold, innovative vision.

Amanda Wiltshire-Craine, Senior Vice President and Head of Global Customer Services at PayPal, is a forward-thinking leader committed to personalizing customer experiences through technology and innovation. In her keynote address, she will discuss PayPal’s approach to customer service under the guidance of their new CEO and the brand’s ambitious three-year strategic plan to radically transform service delivery and operations.

With a focus on personalization and automation, PayPal is setting new standards for customer interactions. It is leveraging data and advanced technologies like AI and GenAI to become more predictive in how the organization surprises and delights its customers. Amanda will illuminate the journey toward completely reimagining servicing as more intuitive, empathetic, and universally accessible.

She is deeply passionate about diversity, equity, and inclusion as a leader, particularly in promoting leadership opportunities for women and people of color. Amanda aims to leave a legacy that empowers the next generation of leaders, making meaningful progress toward a more inclusive C-suite landscape.

Join Amanda to explore how PayPal’s innovative approach to service transformation can inspire your strategies for integrating technology with human-centric customer interactions.

Join us for an enlightening keynote with Emily Sarver, Vice President of Customer Experience at Lovevery, a subscription-based early learning company focused on child development. The brand, known for its dedication to social and environmental impact, has over 350,000 active subscribers and was named one of Fast Company’s Most Innovative Companies in 2024.

Emily is an intentional, dynamic, and forward-thinking leader passionate about building and scaling world-class support teams. She leads from a place of conviction, characterized by a commitment to continuous improvement and a clear vision for the future. During her keynote, she will delve into the CX strategies that have fueled Lovevery’s growth and innovation, including using every interaction as an opportunity to build a meaningful relationship.

You’ll gain an understanding of how acting for the good of society and the planet and having purpose-driven leadership, along with a people-first culture, can help scale operations globally and advance a brand’s reputation while maintaining a solid customer-centric focus.

Attendees will walk away with actionable insights and be inspired to implement purpose-driven leadership within their own organizations to achieve operational success and make a meaningful impact.

Lead your contact center into a future where AI and human agents work together seamlessly. COPC will provide a comprehensive strategy that addresses the technical and emotional aspects of AI adoption.

Using the COPC AI Impact Change Planning tool, participants will gain practical strategies to analyze the impact of AI on agents and create a plan to navigate AI integration. Leaders will learn methodologies that ease the transition, improve agent satisfaction, and increase adoption.

Facilitators will begin by discussing how AI is changing the agent role, followed by an interactive session to methodically analyze the impact of AI in critical areas such as:

- Agent skills and knowledge requirements

- Recruitment and training

- Agent metrics and performance management

- Staffing requirements

- Agent perceptions of AI

This session will culminate in a discussion that brings this information to life to facilitate agent adoption. Leveraging the COPC AI Impact Change Planning tool, you will walk away with a strategy that transforms agent experiences with AI, making their jobs better and more fulfilling.

Are you ready to unlock the full potential of your customer experience strategy by putting your employees at the heart of it all?

OP360 has discovered a better way to achieve industry-leading employee satisfaction and retention rates. With a voluntary attrition rate less than half the BPO industry average, their approach to employee engagement is truly employee-motivated.

In this interactive workshop, you’ll discover how to:

- Create a workplace culture of empowerment that inspires employees to make the best decisions for customers

- Implement AI-enabled technology solutions that free up employees’ time, allowing them to focus on process improvement and identify areas of concern

- Utilize our proven Employee Engagement 360 (EE360) Program to strengthen relationships and connections within your team

- Boost employee satisfaction through recognition, health and well-being initiatives, diversity and inclusion programs, and corporate social responsibility

You’ll leave this session with practical strategies to humanize your employee engagement and customer experience. Get ready to learn a better way to nurture happy, healthy, and resilient employees, which will lead to equally happy customers and a thriving business.

Strategic outsourcing can transform businesses by providing unmatched scalability and access to global talent and deep industry expertise. Despite its advantages, misconceptions like loss of control, quality decline, and remote team issues deter companies.

Driven by operational and financial constraints, Public Storage collaborated with OP360 to explore offshoring as a strategy for achieving customer experience and operational excellence.

This fireside chat will dive into the hurdles, challenges, and ultimately benefits that come with offshoring through the eyes of first-time outsourcer Public Storage. We will unbox their journey including:

- The driving force behind the outsourcing decision.

- The partner model and strategic approach.

- Achieved outcomes compared to initial expectations.

Join us for a workshop focused on how modern AI tools and Analytics are helping enterprises big and small establish the operational building blocks designed to breed a culture of trust and excellence. Learn about ways to distill and protect your data, while also being able to leverage that data across your operations to effectively coach and mentor your frontline, measure the effectiveness of your coaching process, and build a healthy and committed culture of continuous improvement at all levels.

Every business speaks to customer satisfaction and values what a CSAT measure can tell them, but the most significant contributor to customer loyalty sits inside of your own organization. Your corporate culture dictates your customers’ satisfaction. What happens inside of your own four walls is as important as what occurs outside of them. Hear from the President and CEO of one of the nation’s most respected brands on how he fosters culture to drive satisfaction.

Philip Walker is the President and CEO of AAA Life Insurance Company, the life insurance business supporting the vast AAA membership – one of our nation’s most recognized membership organizations with over 60 million members.

Philip is a senior executive with 30 years of experience building and growing strong financial services companies. He will share how he delivers value to AAA’s membership base by understanding their needs and inspiring his team to develop a service-mindset. His vision to foster this corporate culture to serve the AAA member has grown the life insurance business and created loyalty within the overall AAA membership.

Join this live, power-packed session to see how AI-powered learning technology’s power has transformed the contact center training landscape forever. Training expert Casey Denby will deep dive into proven learning theory that has been incorporated into modern-day AI-based technology to scale learning efficiently and cost-effectively.

You don’t want to miss this session if you want confident, prepared, happy agents! Casey will share how leading brands such as United Healthcare, Bank of America, Verizon, Capital One, Prudential, Airbnb, Comcast, and many more have transformed their frontline training programs and found success via AI-Powered Simulation Training. Create the most lifelike, unbiased roleplay experience for your agents: it’s fast, flexible, and scalable.

In this session, you will:

Learn exactly what customers want from your brand and how you can best prepare your frontline agents to deliver through:

- Hands-on AI-Powered Simulation Training

- Understanding of ‘how people truly learn’

- Proven learning strategies you can take home and implement right away

- A live simulation build and practice that you can get your hands on and experience for yourself

Experience first-hand the potency of AI-Powered Simulation Training, as Casey shares:

- How to build a real-life simulation live

- Where GenAI is leveraged in the build

- A real simulated call scenario that you can practice live

In the dynamic world of technology, conversational automation has emerged as a groundbreaking tool, captivating industries with its potential to create measurable positive outcomes in customer engagement. This Tech Forum session offers a unique opportunity to delve into the success story of Home Depot, a trailblazer in redefining customer experience through the adept use of Large Language Models (LLMs) in a manner that is both safe and compliant.

We will explore the intricate distinctions between Retrieval Augmented Generation (RAG) and LLMs, drawing valuable lessons from Home Depot’s journey. Their approach exemplifies how conversational automation demands more than just guidelines and guardrails; it requires a strategic, transformative mindset crucial for achieving success in this domain.

Key takeaways will include:

- Confidence in Automation: Understand how embracing conversational automation in customer service can lead to significant benefits, offering a high return on investment. This segment will showcase concrete examples from Home Depot’s journey, highlighting key outcomes around containment and call resolution rates.

- Practical Roadmap: Gain insights into initiating and navigating the journey of conversational automation. We will dissect the essential considerations and steps necessary to implement this technology effectively, drawing from real-world experiences and the successes and challenges encountered by Home Depot.

- Understanding the Urgency to gain better Outcomes: Grasp the potential repercussions of delaying the adoption of conversational automation. The session will emphasize the urgency of integrating this technology to stay competitive and relevant in the rapidly evolving tech landscape.

- AI Fundamentals: Learn key differences between Retrieval Augmented Generation (RAG) and LLMs and why they matter to you as a leader.

This enlightening keynote address will dive into the transformative impact of customer-centric strategies on business success. In this engaging talk, Tracy Sedlak, VP of Customer Success at Offerpad, unravels the pivotal role of harnessing Voice of the Customer data in shaping robust business strategies, fostering a culture of customer-centric excellence, and embracing the power of stepping outside our comfort zones to glean unparalleled insights from customers.

Discover how these invaluable insights are not just indicators but catalysts for innovation and growth, driving organizations toward a future where understanding and leveraging the customer’s voice are at the heart of every strategic decision.

You can hear more about Tracy’s brand story and leadership journey at CRS Tucson.

The panel discussion delves into the intricacies of launching and sustaining AI-driven customer experience (CX) initiatives. The conversation will explore the landscape of AI implementation, emphasizing the elements necessary for successful deployment and continual support. Hear from Brands that have launched their AI strategies on what challenges they faced, the strategies they used, and the vital components they have planned to nurture and evolve their AI-based CX solutions beyond their inception. Get answers to real questions!

- What skills or roles are needed to stand AI up?

- What skills or roles are needed to sustain it?

- Where does AI for CX sit in the enterprise? Customer Service, IT, Product Management, or a brand new sub-team?

In the fast-paced world of customer support, where quick responses and resolutions are crucial, the arrival of AI has been nothing short of revolutionary. Among the champions leading the charge in AI-driven customer support, AppFolio stands out. AppFolio is an Intercom customer that provides software applications and services for the real estate industry.

This session will dive into how innovative companies like AppFolio structure their customer service teams in an AI-first future. In particular, we’ll uncover how Appfolio was able to adopt this new technology and achieve substantive results quickly, including:

- Increasing their self-serve rate and automatically resolving 30% of customer inquiries using AI

- Decreasing overall response times and time to resolution

- Increasing trial conversions through proactive support

Prioritizing enhanced consumer experiences is paramount for any consumer-facing brand. By integrating AI and automation, businesses can ensure timely and thoughtful responses to online consumers, freeing up resources to focus on efficiency, quality, and meaningful human interactions.

Join Kim McMiller, SVP Global Client Services at IntouchCX, and Luc Antoine, Head of Care at L’Oréal Canada, as they delve into how to harness automation for data integrity, aligning seamlessly with a global vision and deep understanding of consumer needs. This engaging workshop will highlight real examples from a top consumer brand and offer the unique opportunity to collaborate amongst your peers on leveraging automation, people, and processes to optimize operational efficiency and enhance the consumer journey.

AI is taking center stage when it comes to elevating customer service experiences. When we mix the smart capabilities of AI with the kind of empathy only humans can provide – that’s where the magic happens for both companies and their customers.

Our workshop will explore strategies combining the best technology with genuine, empathetic customer service to create experiences that truly differentiate and impact customer loyalty. Attendees will gain practical tips for prioritizing customer needs and emotions, ensuring seamless, personalized service that bridges the gap between digital efficiency and the human touch.

Sentiment… Language… Speech… QA… We all reference these terms, but do we know how to place tangible value on the human voice expressing such communication?

With the numerous human-based metrics used to manage corporate performance, most contact center operations have focused their investment decisions on advancements that remove the human component.

Yet, measures involving VoC, Promoters, and QA remain prevalent throughout all organizations. Many executives have sought to learn the true ROI involving sentiment… a high QA score… or even Top Box satisfaction. For many, attaching an ROI to these metrics appears daunting, moving them to a ‘necessary evil’ expense line. In the age of AI, we find ourselves pushing for investment success associated with corporate efficiency and reduced headcount, but how about the same rigor toward retaining humanity in our enterprises? Societal research continues to show that people resonate with and need personal connection. The relationship between our customers and brands should capitalize on that need while continuing to foster satisfaction with product offerings.

Hear from industry-leading executives who have capitalized on humanity’s power and created tangible business results. Then let’s collaborate as a Community to define what tangible success means for our Humanity Factors. Facilitated break-out tables will focus on customer sentiment, agent behavioral factors, customer satisfaction, and the difference between proactive and reactive communication analyses to design the next generation of ROI models as we embrace the AI era.

Dive deep into the future of financial services with Veronica Semler, Vice President of Servicing Operations at Oportun, as she demystifies the complex interplay between Generative AI and big data in revolutionizing the industry. Oportun, a distinguished FinTech provider committed to making financial goals achievable for over 2 million members, harnesses cutting-edge AI to reimagine customer service dynamics and operational efficiency.

This session promises a thorough dissection of AI-driven strategies reshaping how data is interpreted, consumed, and leveraged for decision-making. Witness firsthand how Oportun’s leaders, supervisors, and agents integrate these advancements to foster a culture of innovation, significantly enhancing customer experiences and driving financial success.

You’ll gain insights into:

- How consumable data eliminates the need for context switching and revolutionizes coaching and QA practices.

- Real-world examples of how actionable insights derived from AI analytics empower staff at all levels to make informed, strategic decisions.

- The ethical considerations and governance of AI in managing sensitive financial data.

Consumer expectations for customer service are higher than ever before – they expect to engage with businesses across multiple digital channels that are convenient, fast, and efficient. Join Amazon Web Services (AWS) to discover best practices for how to leverage phone, in-app and web calling, video, chat, short message service (SMS), and third-party messaging to deliver exceptional customer experiences.

In this session, you will learn about:

- Executing a true omnichannel strategy that delivers consistent customer experiences and better outcomes

- Empowering customers with self-service solutions for increased speed of service

- Driving high-velocity innovation in your contact center to improve your customer experience

Navigating Moments of Truth: A Human-Centric Approach to Employee Engagement and CX Excellence

Join Intradiem’s President and COO, Jennifer Lee, as she leads a panel of industry experts in a discussion that extends beyond the traditional focus to explore the heart of employee engagement, uncovering genuine “Moments of Truth” for both employees and the brand.

As technology continues to impact the type and complexity of live calls, employee experience must remain a focal point. CX leaders must reexamine the agent’s role and establish targeted, effective employee engagement support mechanisms to ensure agents are best equipped to deliver exceptional “Moments of Truth” in these new modern customer interactions.

The conversation will navigate the intersections between these pivotal moments, revealing how mutual support shapes a powerful synergy. Discover how these moments profoundly impact CX and explore strategies for combining technology and acknowledging the human touch to positively influence your “Moments of Truth.”

Key panel takeaways will include:

1. How to find and define your “Moments of Truth”

2. The mechanisms needed to modernize and streamline agent workflow

3. How to put training at the center of your employee experience and engagement

4. Taking a proactive approach with technology to enable a better EX

Are you wondering how to incorporate AI and Automation into your program effectively? Need help figuring out where to begin or how to create a compelling business case? Join Laivly along with Abercrombie & Fitch as they share insights on navigating the new landscape of AI technology in CX. Learn how to fund your tech projects based on cost savings and ROI from the first interaction.

Discover how to identify that crucial first step and develop a persuasive investment story. Learn from real-world experiences where an unexpected, high-value, compliance-based use case was the foundation for broader AI and Automation implementation.

In this discussion, you’ll gain practical tips on:

- Finding early ROI opportunities

- Crafting a smart expansion plan

- Future-proofing your AI strategy

Join us and embark on the path to AI and Automation success!

Today’s service delivery landscape largely focuses on technology-driven solutions thanks to emerging channels, cutting-edge tools, and AI-powered insights. But without a human-centric approach, you risk losing the emotional connection and empathy needed to unlock the value behind your investments.

Real transformation requires a human-centered design to succeed, so ensuring your service delivery approach is modeled around (and for) people is crucial to delivering outstanding customer experiences. In this session, Tadd McAnally, VP of CX Advisory services at VXI, will discuss prioritizing the human element by applying design-thinking principles backed by real-world examples and success stories in an increasingly complex ecosystem.

Join this compelling Customer Shop Talk to learn:

- How to assess your service delivery model and its maturity level

- How to ensure your transformation roadmap is collaborative, agile, and human-centric

- How to synchronize your entire service delivery network around your transformation efforts

Don’t miss out on this important session — or the opportunity to design with your people (not just bots) in mind.

Dive into the cutting edge of AI and revolutionize your customer experience (CX). Whether you’re just starting or already on the path, this is your invitation to a game-changing dialogue on AI’s role in elevating both customer and agent interactions.

Discover the stark contrast between legacy architectures and the dynamic future promised by AI-infused technologies. Learn how AI can seamlessly assist with post-call enhancement, provide next-best-action recommendations, customize IVR and dialog flows, and enrich your knowledge base.

Join us to explore how wrapping AI around your current IT infrastructure can drive intelligence, foster innovation, and inject agility into your operations. This is more than a chance to upgrade your technology—it’s a pathway to reshaping your organizational landscape and redefining the CX you deliver.

A customer doesn’t think about channels. Some communicate only on one channel; some effortlessly flit across channels. But brands and their Customer Experience (CX) leaders don’t have that luxury. They must manage preferences across all their customers while trying to strike the balance of service excellence, operational costs, and an array of automation and artificial intelligence (AI) capabilities across channels.

How do brands manage the tension between serving customers however they want, in whatever channel they want, and proactively steering customers to channels to manage customer experience and efficiency?

This panel will dissect modern frameworks for crafting effective customer channel journeys. We’ll delve into customer behaviors across channels and the importance of fostering lifelong conversations that don’t just span channels but span the entire customer relationship, moving beyond mere transactions. Insights from diverse brands will shed light on the evolving strategies and critical decisions in the era of customer experience AI and automation.

Join us as we explore the seamless channel experience. Our focus will be on how brands can balance operational efficiency and customer-centricity in the digital age, underscoring the need to delight customers, build meaningful connections, and drive lifelong customer loyalty in the constantly evolving customer service landscape.

It’s often assumed that gains in operational efficiency come at the cost of customer experience. That’s not the case for Bob’s Discount Furniture. They are leaders in both operational efficiency and NPS.

A customer-obsessed culture and great product value are the foundation of Bob’s brand. But more importantly, Bob’s also leverages digital channels and Generative AI at strategic points in their customer journey, allowing them to minimize phone contacts while still getting customers the information they need. It’s a win-win, offering great CX while minimizing costs.

Join a conversation with Quiq and Bob’s to learn:

• Insights into the techniques that Bob’s used to drive CX efficiency and the outcomes they achieved

• Understanding of the Generative AI Maturation Curve that Bob’s is using to plan the adoption of safe Generative AI

• Suggestions about how to navigate organizational challenges, risks, and barriers to AI

Even the most reputable companies strive for constant improvement in today’s fiercely competitive business landscape. As technology reshapes traditional operations, it has become imperative for organizations to foster consistency, increase efficiencies, and create a high-performance culture. Join us for an insightful session featuring Lance Gruner, Mastercard’s Executive Vice President of Global Customer Care, and Kyle Kennedy, President and CEO of COPC Inc. They will reveal how Mastercard’s commitment to excellence led them to adopt and implement a unified performance management framework. Lance will share Mastercard’s impressive journey and its sustained impact on the organization and its customers. Don’t miss out on the chance to hear Mastercard’s story of continued success in the face of rapid change, solidifying its leadership position in the CX industry.

In today’s tech landscape, the promise of conversational automation has captivated industries, offering unparalleled opportunities for customer interaction. Join Knowbl as we delve into the transformative potential of Large Language Models (LLMs) and their role in fulfilling this long-awaited promise.

This Tech Forum session aims to dissect the fundamental distinctions between RAG (Retrieval Augmented Generation) and LLMs and shed light on the nuances that differentiate these technologies in conversational AI.

Moreover, we’ll be navigating the critical compliance landscape within conversational automation. While guardrails are essential, the Knowbl team will illustrate why a compliance-approved approach necessitates more than mere guidelines, showcasing how Knowbl tackles this challenge effectively.

Amidst the conversational AI buzz, this Tech Forum seeks to transcend the hype.

Attendees will:

- Learn why leading brands are embracing this technology’s solutions.

- Uncover tangible applications, success stories, and real-world scenarios where these solutions have reshaped customer experiences for enterprises.

- Understand the differences between RAG and LLMs.

- Grasp the potential of LLMs in transforming how customers interact with brands, marking a significant shift in the dynamics of customer-brand relationships.

This enlightening keynote address will dive into the transformative impact of customer-centric strategies on business success. In this engaging talk, Tracy Sedlak, VP of Customer Success at Offerpad, will unravel the pivotal role of harnessing Voice of the Customer data in shaping robust business strategies, fostering a culture of customer-centric excellence, and embracing the power of stepping outside our comfort zones to glean unparalleled insights from customers.

Discover how these invaluable insights are not just indicators but catalysts for innovation and growth, driving organizations toward a future where understanding and leveraging the customer’s voice are at the heart of every strategic decision.

You can hear more about Tracy’s brand story and leadership journey at CRS Tucson.

When you love the great outdoors, it makes sense to try and do everything you can to protect it. That’s why Arc’teryx, the high-performance outdoor equipment brand, is doing its part to consider its environmental impact every step of the way. The brand is committed to incorporating sustainability and circularity into every business decision, making them integral to its core business.

Join Dave Pitsch, Vice President of Guest Services at Arc’teryx, as he addresses the brand’s unwavering commitment to delivering a premium guest experience and investing in its guests throughout the entire lifecycle of its products. The brand’s emphasis on performance, attention to detail, and dedication to environmental responsibility has garnered a strong reputation among outdoor enthusiasts.

Learn how the brand is shaping the future of guest experience, addressing crucial moments of truth, and aligning its product design, circularity initiatives, and guest services to cater to the evolving needs and expectations of its guests.

Simplifying Contact Center Processes to Drive Cost Savings and Better Experiences

The contact center industry plays a pivotal role in delivering efficient customer service and support across various sectors. However, operating a contact center can be costly, primarily due to the highly labor-intensive nature of its operations. To address this challenge, organizations are increasingly turning to automated process flows to optimize their contact center operations and achieve significant cost savings.

Join us as we share the remarkable transformation journey of PSCU, the nation’s largest credit union service organization, and how they leveraged AI and automation to simplify hundreds of workflow processes and eliminate their complicated knowledge base, all while boosting agent performance and efficiency.

By streamlining and automating data retrieval and consolidation, knowledge management, and issue escalation processes, organizations free agents to dedicate their expertise to more complex, value-added tasks. This not only amplifies their productivity but also elevates customer satisfaction by ensuring faster and more precise resolutions.

In this session, Kim West, VP Product Marketing from Uniphore, will share invaluable lessons and key takeaways to:

- Harness the power of AI to automate knowledge search and time-consuming tasks

- Ensure compliance with industry regulations through guided workflows

- Drive retention by empowering agents to focus on the customer experience

Creating a Healthy, Tech-Empowered Workplace

Technology is most effective when it’s used in the service of human beings. Join Intradiem President Jennifer Lee for a customer case study featuring Jim Simmons from Synchrony Financial. Jennifer will discuss technology-driven strategies to boost employee well-being and performance, and Jim will explain how Synchrony Bank is leveraging automation along with Thrive’s science-backed behavior change platform to provide more human-centric support to its contact center agents. Arianna Huffington, founder and CEO of Thrive Global, will share via video how Thrive embeds employee well-being into everyday workflows, which lowers stress, builds resilience and improves performance.

The Lifelong Conversation: How to Make Customer Service Your Business Using a Seamless Channel Experience

In today’s competitive business landscape, customer service has become the ultimate differentiator. It’s no longer just about resolving issues; it’s about creating meaningful connections that foster loyalty and advocacy. Channel shouldn’t matter when it comes to delighting customers. The modern customer service team needs to meet customers where they are without having their information siloed across tools or channels. This panel discussion will bring together industry experts, thought leaders and seasoned practitioners to explore effective strategies and best practices for cultivating lifelong conversations with customers. Through engaging dialogue and insightful anecdotes, the panelists will highlight the key elements contributing to making customer service the centerpiece of your business by establishing a seamless channel experience that transcends traditional boundaries.

The Era of Intelligent CX: Opportunities and Challenges

We all see it coming: an AI revolution that is more than just hype. However, figuring out how to make it work smoothly for customer service and finding the best way to learn, evaluate, and put it into action is not a walk in the park.

Join us as we examine the opportunities for leveraging AI to better understand, shape, customize, and optimize the customer journey and your overall CX. We’ll share real-world success cases and even a few cautionary tales of when things didn’t go quite as planned.

You’ll leave this Shop Talk understanding:

- The unique requirements and considerations for applying AI in a CX setting.

- Ways to identify opportunities for AI to bring real value to your CX organization, in hours and not years.

- The future role of an agent, and how their interaction with the customer will change in the short, medium, and long term.

Digital First, Member Always: How Navy Federal Credit Union Brings this Mantra to Life

Navy Federal Credit Union (NFCU) has always been focused on providing member-first service to more than 12 million global customers, and their frequent recognition by Forrester, KPMG, and JD Power reflects that emphasis. With the advent of more advanced technology, NFCU has worked hard to strike a balance between the high-touch interactions and digital journeys they provide to members. To guide this work, NFCU adopted a Digital First, Member Always philosophy.

In this session, we will share a glimpse into some of the ways the NFCU customer experience teams are bringing this mantra to life. Starting with the fundamentals, we will discuss key initiatives that are improving the member’s digital journey.

You will learn about:

- How NFCU got started on this journey and how the philosophy drives the organization’s focus

- Some of the new capabilities and processes NFCU has launched to achieve this balance and recent results that highlight success

- Future plans for additional digital self-service capabilities, including expansion of multi-channel bots and large language models (e.g., ChatGPT)

We hope you will join us to hear about NFCU’s exciting journey as they deliver compassionate service that strives to always put the member first!

Leading Across Generations: Understanding and Embracing Differences in the Modern Workforce

As the workforce grows and diversifies, leading across generations has become more challenging than ever before. There are now five generations in the workforce (Silent Generation, Baby Boomers, Generation-X, Millennials, Generation-Z), each with unique values, communication styles, work preferences, motivators, and technology habits. This can lead to misunderstandings and incorrect assumptions among colleagues, making it critical to welcome different perspectives and use them to lead effectively.

In this workshop, COPC Inc. will present 2023 research that illuminates the reality of generational divides in contact centers and customer experience operations.

Attendees will:

- Learn the characteristics, preferences, and drivers of each generation and their impact in the workplace

- Discuss how to gather similar insights and data from their employees

- Uncover effective leadership methods for each generation, while understanding the importance of individual management styles for each person

- Create a leadership enhancement plan based on shared research and best practices (participants can bring their own data if they have it)

- Share thoughts on how to ensure generational awareness is spread throughout the management chain down to frontline management

Join COPC and your peers to learn how to transform your leadership approach and create a culture that welcomes generational differences. Leave this session with a better understanding of how to lead and manage each generation while maintaining individual strategies for maximum success.

SmileDirectClub Facility Tour

Join Execs In The Know on Friday, September 22nd at 2 PM for an exclusive behind-the-scenes tour of SmileDirectClub’s manufacturing facility, dubbed the SmileHouse.

Your host Alvin Stokes, SmileDirectClub’s Chief Customer Contact Officer, will share how this disruptive brand streamlines the process from customer engagement to purchase decision and innovates the CX journey for their customers. He’ll delve into the fascinating connection points between customer experience and the SmileMaker Platform, which uses advanced AI technology via an app to put game-changing innovation in the palm of consumers’ hands.

Be a part of this tour to witness firsthand the impressive 3D printing technology, observe the seamless production of the brand’s clear aligners, and explore how using the power of innovation can up-level your customer experience. We can’t wait to see you there!

Unleashing the Power of Customer Data: How iRobot Leverages Customer Care to Build Lasting Relationships in a Competitive Tech Landscape

As a leading robotic vacuum cleaning company, iRobot prides itself on being a mission-driven builder that is revolutionizing the way the world cleans with consumer robots. In this thought-provoking keynote, Ledia Dilo, VP – Head of Global Customer Care and Fulfillment at iRobot, will address how the brand leverages customer-centric strategies to drive sustained growth, optimize operations, and build customer loyalty in an industry currently challenged by slowing demand, growing competition, and supply chain costs.

With a focus on optimizing operations, she’ll unveil the four strategic pillars that drive iRobot’s customer interactions, streamline onboarding experiences, and transform the contact center into an insights-driven and strategic business unit. Additionally, she’ll highlight the crucial role of the care team, their technical training, and collaborative partnerships with engineering in addressing complex product issues. Find out how iRobot leverages customer data and proactively engages with consumers to build lasting relationships. Drawing on her extensive experience, inspiring anecdotes, and metrics showcasing the success of proactive initiatives, she will demonstrate the immense value of customer-centricity in shaping the trajectory of brands to deliver win-win outcomes.

Join Ledia to gain insights on iRobot’s remarkable legacy that will inspire you to reshape your brand’s customer experience, unlock new growth opportunities, and cultivate enduring customer loyalty.

Size Is No Barrier: How Brands of Every Scale Are Navigating the AI-CX Landscape

Join our panel of experts as they delve into the world of artificial intelligence (AI) and its potential transformative impact on customer experience (CX). The revolutionary capabilities that AI offers enable brands to drive meaningful change by delivering efficiency, hyper-personalization, predictive insights, and seamless interactions, to name just a few.

This thought-provoking discussion will shed light on a wide range of perspectives, various use cases, and challenges and opportunities that are specific to an organization’s size and structure when deploying AI in the current CX landscape. Our panelists will also share how they are navigating critical considerations, such as ethics, data privacy, and striking the right balance between automation and maintaining a human touch.

LOOP Insurance Replaced Their Chatbot with Generative AI and Resolution Rates Soared!

The latest AI has the potential to automate far more customer inquiries than prior generations of conversational AI. While most people are familiar with ChatGPT, few have deployed Large Language Models in customer-facing applications.

LOOP Insurance is excited to share their journey from a simple chatbot to a state-of-the-art LLM-powered AI assistant that has yielded an impressive increase in effectiveness and CSAT.

In this session you will learn:

- How LOOP’s new AI Assistant is remarkably better than its prior-generation chatbot

- What resolution rates and outcomes LOOP achieved

- How LOOP was able to harness the power of LLMs to serve customers safely

From Ordinary to Extraordinary Customer Support: A Real-World AI Success Story

Witness the transformative journey of iPostal1, the worldwide leader in digital mailbox technology, as we explore the extraordinary impact of artificial intelligence (AI) on customer service. Due to rapid growth and an influx of customers, the brand was challenged to rethink how it could maintain its high standards for an exceptional level of service.

Armed with innovative solutions and a dynamic suite of tools, iPostal1 was able to scale its operations and take control of how it managed relationships with customers without diminishing the quality of its customer service.

Join us to hear from Dan Medina, Director of Customer Service Operations at iPostal1, and Colin Crowley, CX Advisor at Freshworks, to learn how AI can empower your customers, agents, and leaders alike by:

- Deflecting queries and automating repetitive tasks

- Building efficiencies to enable improved customer resolution times and retention

- Saving agents time and getting to contacts in under a minute

- Equipping teams with valuable insights to improve operational efficiency and focus on what matters most

Achieving Cultural Alignment: Neiman Marcus Sets the Stage for Optimal Strategic Partnerships

The cultural alignment between your organization and your BPO partner has become an essential ingredient to drive performance, speed to proficiency, and a consistent customer experience across locations. When cultural alignment is measured, monitored, and continuously calibrated, both companies arrive at a greater understanding of each other’s beliefs, communication processes, and abilities—functioning with truly dynamic collaboration.

In this session, learn how Qualfon and Neiman Marcus’ cultural alignment has resulted in the ultimate strategic partnership.

- Measure your company culture using internal NPS and surveys

- Integrate your company culture into your BPO’s training curriculum to produce brand ambassadors

- Discover the results that are revealed with a culturally aligned partnership

The Future of Work: Navigating the Change Curve

In a rapidly changing world, the landscape of work and business is undergoing unprecedented transformations. During his keynote, Peter Mallot, Worldwide Support Leader for Modern Life and Business Programs at Microsoft, will provide an informed perspective and timely insights at the intersection of Workforce Strategy, Culture, and Technology.

The future of work is progression, not an overnight solution. It demands a shift in corporate culture, management philosophy, and workforce adaptation, where the focus moves from reactive to proactive, from support to achievement. The path forward lies in a delicate balance between leveraging technology’s capabilities and empowering people toward greater productivity, collaboration, flexibility, and automation to enable customers to achieve more.

Join Peter to gain a deeper understanding of the engine required to drive change for an organization’s most valuable assets — its teams and its customers.

Behind the Screens: How MoviePass Is Redefining Customer Experience and Building a Moviegoer Community

In this engaging keynote address, Stacy Spikes, CEO of the nationwide movie subscription service MoviePass, will take you on a journey where customer-centricity and innovation converge to create immersive and personalized cinematic experiences for all.

Discover how MoviePass is reinventing itself post-bankruptcy, overcoming past challenges, embracing product and customer service improvements, and setting its sights on a new horizon. From navigating customer churn to building customer trust and loyalty, Stacy will share real-life examples of the pivotal moments and invaluable insights that are shaping the brand’s trajectory.

Learn why diversity and building community are not just cultural values, but smart business moves that lead to better products and greater market reach. Additionally, Stacy will delve into the potential of blockchain technology as a powerful tool to bridge the gap between moviegoers and filmmakers.

Join us for this thought-provoking and inspiring look into the future of cinema and Stacy’s ambitious plans for reinventing moviegoing for customers.

Finding the ROI of Your CX Vision

As CX leaders, we recognize that enabling a customer-centric vision holds immense potential for driving value for our organizations.

Join our esteemed panel of industry experts as we explore how CX is used to drive revenue opportunities and strong business value. The panel will identify the key KPIs and performance metrics that leaders must be measuring and monitoring to validate and amplify the value of their CX vision. With an ever-changing technology landscape alongside evolving customer expectations, we will explore the future impacts of CX ROI and discuss the influence it will have on what we measure.

From this session you will take away:

- The CX outcomes that can drive value to your organization.

- Effective KPIs and performance metrics that demonstrate the value of your CX initiatives.

- Considerations on how to measure and evaluate your future state of CX.

What Is a Digital Worker?: Harnessing ChatGPT and Automation to Create New Strategies in CX

Enter digital workers, where AI and automation meet to support employee and customer experiences. They are changing our understanding of the future of work.

Digital worker technology will continue to advance its capabilities to support human employees. The unique capabilities of Generative AI and automation are enabling CX leaders to gain a deep understanding of customer preferences, behaviors, and pain points.

Join us as we explore a real-world example of how a leading brand harnessed digital workers to revolutionize its contact center operations.

During this Shop Talk, you’ll discover:

- How digital workers can connect with customers by performing tasks guided by the power of Generative AI technology to provide consistent, on-brand CX.

- Ways to layer this technology onto your existing systems to overcome traditional tech blockers and modernize your customer care strategies.

- The tangible outcomes achieved by real-world clients, including accelerated productivity, exceptional first-contact resolution, and elevated customer satisfaction.

- How to leverage this technology in new ways to enhance agents and scale up customer service.

Data-Driven Customer Insights: Leveraging Analytics to Anticipate and Exceed Customer Expectations

As artificial intelligence (AI) and machine learning (ML) technology evolve, contact centers are using them to transform customer experiences through agent assist, self-service, and conversational analytics capabilities.

Join Amazon Web Services (AWS) and Truist to discover best practices for how to leverage AI-powered technology to deliver exceptional customer experiences. In this session, you will learn about:

- Executing a connected channel strategy that delivers consistent customer experiences

- Leveraging AI/ML for real world outcomes like better self-service, agent assistance, and conversational analytics

- Turning data into insights that drive continuous improvement of your customer experience

The Agent, the Data, and Technology: Three Pillars for Enabling CX Personalization

With the global CX personalization market forecast to hit $11.6 billion by 2026, it is imperative that brands embed true personalization opportunities across the service journey. Delivering that personalization begins with bi-directional omnichannel engagement, which requires cutting-edge technology, insightful analytics, and advanced agent enablement.

This incredible panel will showcase agents alongside their business leaders in what is sure to be a powerful conversation on the approach needed to radically transform customer relationships. Join us as we unpack and explore critical insights from two unique perspectives on:

- What agents need to enable next-level personalization

- The influential role of agent empowerment in reaching CX goals

- How advanced analytics will unlock new opportunities

- Reaching the goal of moving Customer Service from a cost center to a profit center

- The role of technology and how it sets frontline agents up to create even more meaningful relationships with customers

Ignite the Leader Within: Embracing the Power of Inspiration for Extraordinary Results

Inspiration is a catalyst for greatness. It transcends the mundane and ordinary, breaking through conventional boundaries to unleash the full potential of individuals and teams. In this captivating keynote, Carolyne M. Truelove, Vice President, Reservations and Customer Relations at American Airlines, will unveil the immense power of inspiration and its ability to ignite a drive for exceptional results and lead transformative change.

Discover the extraordinary possibilities that lie within you as a leader. Everything a leader does requires connection, including vision, strategy, and execution. Learn the art of connected leadership, fostering a culture of open-mindedness, and leveraging your leadership to navigate through the ever-evolving business landscape. When inspiration permeates every aspect of an organization, magic happens. Join Carolyne for an unforgettable keynote and unlock the secret to inspiring others into action.

Building Trust in Generative AI

Generative AI brings forth a new realm of possibilities for enhancing the customer experience. However, its propensity to occasionally deliver inaccurate or nonsensical information — a phenomenon known as a hallucination — could potentially impact hard-won customer loyalty. To ensure its success, generative AI has to solve problems and respond in a way that is accurate, helpful and free from toxicity and bias.

Join AI experts from TELUS International, a leading digital customer experience provider, for a Tech Forum exploring:

- The emerging technologies and processes that are being deployed to increase the accuracy of generative AI models.

- Potential legislation, similar to the AI Bill of Rights, being proposed in the wake of the generative AI explosion.

- Consumer perception of AI-generated content, including accuracy, completeness, attribution and age-appropriateness.

- And more!

How to Cultivate a Culture of Well-Being for a Resilient Workforce

Companies today require a robust well-being strategy to have a material impact on the growing crisis of workplace mental health and business resilience. Join us for this interactive session as we explore the Four Building Blocks of Emotional Intelligence. We’ll be exploring how to intentionally cultivate learning, development, and leadership strategies that focus on mindfulness and emotional intelligence, and how they are enhanced by dynamic neuroinsights and AI technology.

Key takeaways:

- Learn how well-being helps to drive retention, effectiveness, and efficiency gains throughout the workforce.

- How well-being technology programs leverage AI to assist with the timely delivery of well-being interventions.

- Ways to deeply integrate holistic system design and well-being practices across the employee lifespan.

The Conversational AI Divide

A great divide is emerging in customer experience (CX), and leaders have the opportunity to blaze a trail with Conversational AI and set their service experience apart.

Conversational AI presents the opportunity for companies to harness new technology in a low-risk, high-impact environment. Embracing these new possibilities makes it possible for CX leaders to deliver enhanced and effective service at scale.

During this Tech Forum, you will be guided through the steps and considerations needed to build your very own AI assistant. Participants will walk away appreciating how simple and risk-free it can be to deliver big CX wins.

Join us as we explore:

- The universal presence of AI assistants: Understanding how AI assistants are becoming ubiquitous, shaping the future of customer interactions across industries.

- Building better customer experiences with reduced levels of effort: Discovering strategies to leverage Conversational AI to streamline and enhance the customer journey, delivering seamless experiences that require minimal effort from customers.

- The ease of creating your very own virtual AI assistant: Exploring the possibilities of building custom AI assistants without the need for extensive coding or AI expertise, opening new avenues for innovation and personalized experiences.

- Uncovering the distinct advantages and untapped potential that can be leveraged by embracing Conversational AI and staying at the forefront of this evolving landscape.

Don’t miss this opportunity to bridge the Conversational AI divide and unlock the full potential of AI assistants in revolutionizing your CX.

Improving EX in a Remote Workforce

In today’s remote work environment, organizations must not only hire the right talent but must also leverage technology to effectively manage and improve the employee experience (EX) throughout the agent life cycle.

To boost agent experience and reduce cognitive load, leading organizations are:

- Using job simulation previews to set realistic expectations

- Helping accelerate onboarding and training through micro-module learning and personalized AI avatars

- Managing agent stress and anxiety with rapid employee feedback and real-time reporting

- Applying generative AI across EX to drive improved satisfaction

In this 90-minute session, we’ll explore how to best empower remote employees and motivate them to foster collaboration amongst themselves as well as with managers. With effective strategies in place, companies will see higher ESAT, engagement, and retention from happy and productive team members.

AI Simulation Roleplay Training for Speed to Proficiency in the New Hybrid Workforce

As you build onboarding for new hires, getting your agents to a place of confidence and proficiency can often be a challenge, especially in a hybrid workforce environment.

In this interactive session, we will reveal key findings on CX preferences designed for today’s consumers and what experiences they truly expect when contacting your brand for support. Find out why driving higher speed to proficiency with new hires is the top metric for delivering the experience your customers expect and deserve.

The hybrid workforce is here to stay. Learn why companies are losing customers and market share by not having effective onboarding. While companies shifted to ‘virtual learning’ out of necessity, most lack a cohesive and scalable training and onboarding strategy for new hires in a global hybrid workforce.

In this session you’ll learn:

- The most effective methods to onboard new hires in a global hybrid workforce

- Best practices from your peers that are leading the charge in driving speed to proficiency

- Key findings on CX preferences impacting your business

- How to best scale onboarding consistency and accountability

- Which innovative technologies can be leveraged to achieve key outcomes

JACK MEEK

Vice President, US Care Support Operations, GoDaddy

Jack Meek currently leads customer care operations at GoDaddy, the world’s largest services platform for entrepreneurs. GoDaddy’s mission is to empower its worldwide community of 20+ million customers, and entrepreneurs everywhere, by giving them all the help and tools they need to grow online. With 21M+ customers worldwide and 84M+ domain names under management, GoDaddy is the place folks come to name their idea, create a compelling brand and a great-looking website, attract customers with digital and social marketing, and manage their work. Jack is responsible for developing and executing the future global care strategy that will continue to differentiate GoDaddy as the advocate of small business success throughout the world.

Jack is an influential change leader who has a passion for technology, innovation, and seeing people succeed. His foundational expertise and passion for building a solid employee- and customer-centric culture started at MCI, where he was repeatedly recognized for record-breaking performance and leadership achievements. He then went on to help build and consolidate large-scale customer service and sales organizations in the Telecom and Retail Energy spaces. Jack has successfully transformed and maintained customer operations and sales organizations of all sizes and across many different industries. He spent several years as a senior leader in the Business Process Outsourcing (BPO) industry, helping multiple clients such as Verizon, Sony, and T-Mobile achieve their customer outcome goals.

JEFF MYERS

Vice President and General Manager Customer Care, SiriusXM

Jeff is a passionate advocate for customers and for designing frictionless experiences for them. He began his career in marketing in 1991 as a call center agent while attending the University of Wisconsin–Madison and studying Communication Theory and Research. The first eight years of his career included multiple call center operational roles. Following those years in operations, Jeff spent 10 years in the Omnicom family in both the US and the UK/Europe, with multi-channel responsibility for Client Services and Program Strategy in a variety of verticals including Telecom, Energy, Media, and Non-Profit. He currently serves as the VP and GM of Listener Care for SiriusXM, with responsibility for all live customer interactions. His scope includes partnerships with 16 BPOs in nine geographies, representing more than 60 support center locations.

BILL COLTON

Co-Founder and CEO, Global Telesourcing

Bill Colton is the Co-Founder and CEO of Global Telesourcing, a premium provider of digital and voice customer experience and sales solutions for some of the largest and most recognizable brands in the US. Its workforce is made up of native English-speaking agents who spent their formative years living in the US, making it as bicultural as it is bilingual. Global Telesourcing serves clients from centers in both Monterrey and León, Mexico, as well as through work-from-home agents.