Are You Measuring CSAT for AI?

As AI becomes the face of more customer interactions, a critical question emerges: how satisfied are customers with their AI experiences, and are companies even measuring it?

by Execs In The Know

Artificial intelligence (AI) has shifted from a back-office enabler to the frontline. Whether it’s through virtual assistants, chatbots, or intelligent routing systems, AI is now a significant part of how customers experience a brand.

But as these touchpoints scale, leaders face a pressing question: Are we measuring the quality of those experiences in a way that reflects their growing impact?

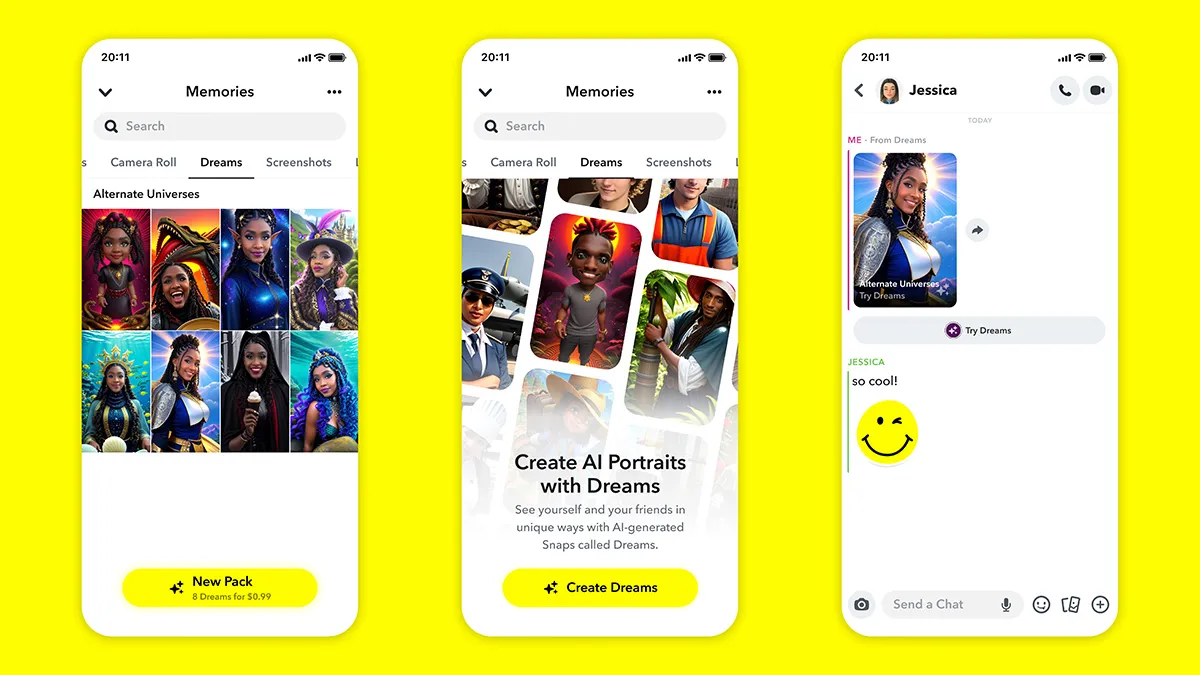

Customer satisfaction (CSAT) has long served as a guiding metric in service organizations. Yet many companies still assess it primarily through human-assisted interactions: calls, chats, or emails handled by agents. AI-led interactions, by contrast, often fall outside the measurement framework, not because leaders are neglecting them but because the methodology is still catching up to the moment.

This isn’t a matter of right or wrong. It’s a reflection of where many brands find themselves: navigating new ground, exploring how to measure value in emerging digital channels, and asking the right questions along the way.

Rethinking What “Good” Looks Like

When AI was first introduced to customer experience (CX), its success was largely defined by operational wins, including reduced handle time, containment rates, and cost savings. These metrics are still important, but they tell only part of the story. A system can be efficient and still fail to meet customer expectations.

Measuring CSAT for AI isn’t about checking a compliance box. It’s about creating a fuller view of experience, one that includes emotion, effort, and trust. And there’s no single playbook for how to do that. Some organizations are experimenting with post-interaction surveys specific to AI. Others are exploring conversation analytics, open-text insights, and sentiment data as proxies for satisfaction.
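To make the survey route concrete, here is a minimal Python sketch of scoring CSAT separately for AI-led and agent-led interactions. It assumes the common "top-two-box" convention (ratings of 4 or 5 on a 1–5 scale count as satisfied) and uses hypothetical channel labels and sample ratings, not data from any report or platform.

```python
from collections import defaultdict

# Hypothetical post-interaction survey results: (channel, rating on a 1-5 scale).
# Channel labels and ratings are illustrative sample data only.
responses = [
    ("ai", 5), ("ai", 2), ("ai", 4), ("ai", 1),
    ("human", 5), ("human", 4), ("human", 3),
]


def csat_by_channel(records, satisfied_threshold=4):
    """Return CSAT per channel as the % of ratings at or above the threshold.

    The default threshold of 4 implements the common top-two-box
    convention on a 1-5 scale (4 and 5 count as satisfied).
    """
    totals = defaultdict(int)
    satisfied = defaultdict(int)
    for channel, rating in records:
        totals[channel] += 1
        if rating >= satisfied_threshold:
            satisfied[channel] += 1
    return {ch: 100.0 * satisfied[ch] / totals[ch] for ch in totals}


for channel, score in csat_by_channel(responses).items():
    print(f"{channel}: {score:.1f}%")
```

With these sample ratings the AI channel scores 50.0% and the human channel 66.7%. Pooling all seven ratings into a single number would report about 57.1% and hide the gap between the two channels.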

The takeaway? Quality can be measured in many ways. What matters most is having a system in place that allows you to listen, learn, and iterate.

Legacy metrics like CSAT and net promoter score (NPS) were built for a different era, one where human interactions dominated and surveys captured the bulk of feedback. But as AI takes over routine tasks and reshapes the contact mix, these tools are starting to show their limitations. According to CX Today [1], analysts are calling for a new generation of metrics.

Predictive tools like expected NPS (xNPS) use AI to estimate how satisfied a customer is likely to be, even without a survey response. Platforms like Salesforce are rolling out “Customer Success Scores” to flag where friction exists and where teams can act before the customer even complains. It’s a shift from static scoring to dynamic insight, and it reflects a broader truth: you can’t optimize what you’re not measuring, and you can’t measure AI the same way you measure a person.
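For context on why survey-based scores leave blind spots, the sketch below computes a standard Net Promoter Score (percentage of promoters answering 9–10 minus percentage of detractors answering 0–6 on a 0–10 scale) alongside survey coverage. The interaction count and scores are hypothetical. Customers who never answer simply drop out of the score, which is the gap predictive approaches such as xNPS aim to fill; this sketch does not attempt to model xNPS itself.

```python
def nps(scores):
    """Net Promoter Score from 0-10 "likelihood to recommend" answers.

    Promoters answer 9-10 and detractors 0-6; passives (7-8) count only
    in the denominator, so the result ranges from -100 to +100.
    """
    if not scores:
        raise ValueError("NPS is undefined without survey responses")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


# Hypothetical sample: 10 AI-handled interactions, of which 4 answered the survey.
interactions_handled = 10
survey_scores = [10, 9, 9, 6]

score = nps(survey_scores)
coverage = 100.0 * len(survey_scores) / interactions_handled
print(f"NPS {score:+.0f}, based on {coverage:.0f}% of interactions")
```

Here a score of +50 describes only 40% of the interactions; the other six customers' experiences are invisible to it.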

Why Measurement Matters, Even If It Looks Different

Customer expectations around AI are rising. In 2023, many customers were curious. In 2025, they’re discerning. They know when they’re interacting with AI, and they have a sense of what “good” should feel like, whether that means speed, clarity, or a graceful escalation to a human. This evolution creates both opportunity and pressure. If AI is the first point of contact, then it’s also the first impression. Measuring the experience isn’t just about accountability. It’s about influence and understanding how AI shapes perceptions of your brand.

Some brands now apply CSAT in the same way across all channels, including AI. Others take a more differentiated approach. What’s consistent across high-performing organizations is a commitment to insight: finding ways to hear the customer, however the interaction unfolds.

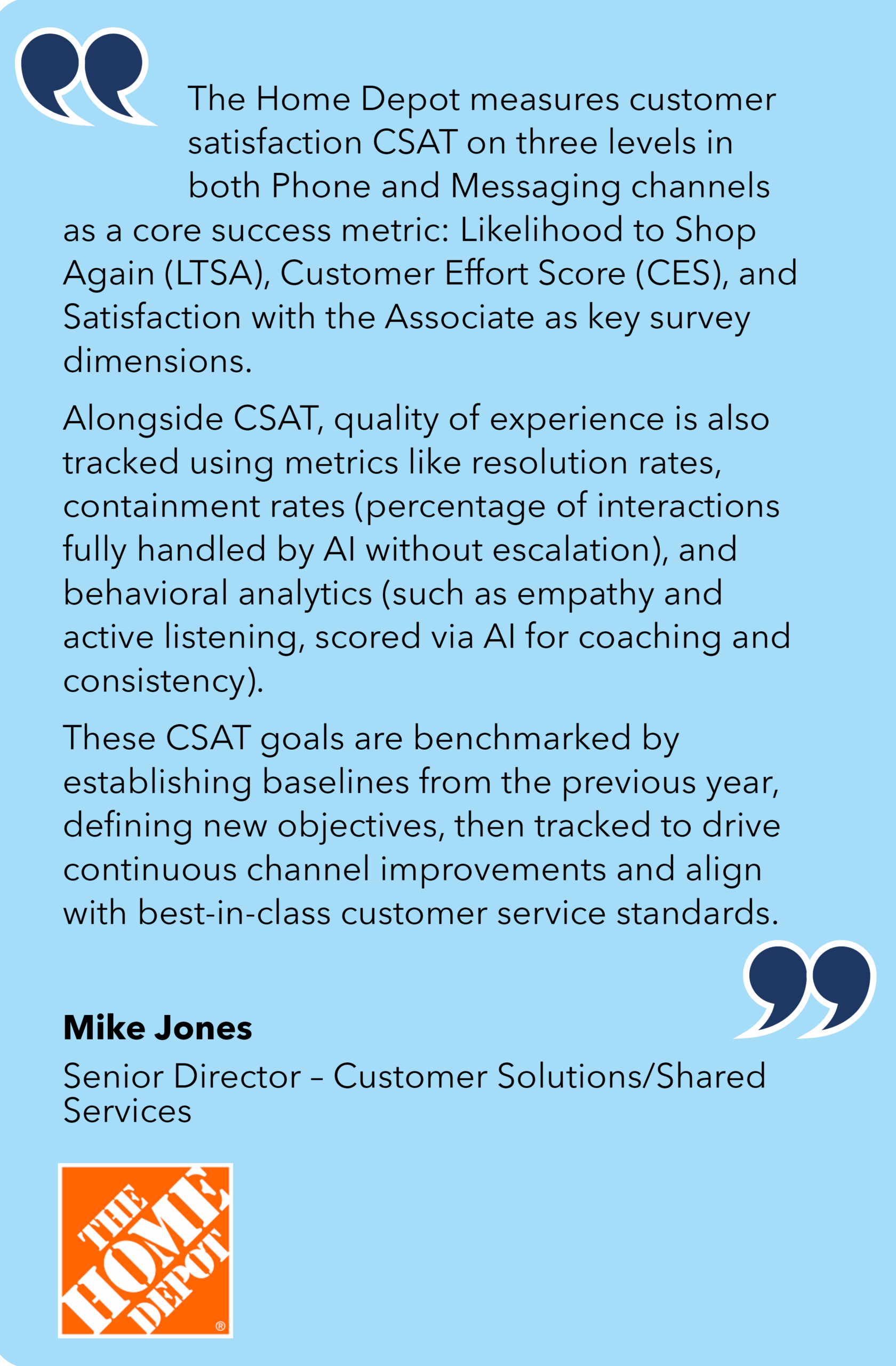

When AI experiences are implemented without considering customer perception or measuring satisfaction, the consequences can be swift and severe. Take Snapchat’s 2023 launch of My AI, a generative chatbot pinned to the top of users’ chat feeds. Rather than delighting users, the unremovable feature sparked backlash, with many describing it as invasive or unsettling. App store ratings plummeted, and searches for “delete Snapchat” surged by nearly 500% in the months following the release.

The problem wasn’t just the presence of AI; it was the absence of customer insight guiding the implementation. As Harvard Business Review [2] points out, assuming AI will automatically add value without listening to users can erode brand trust and trigger reputational damage. Measuring how AI is experienced is essential not just for optimization but for risk mitigation.

From the Frontlines: AI Satisfaction and the Experience Gap

If AI is becoming the face of your brand, then your customers’ satisfaction with AI deserves the same rigor as any other channel. But the data from our 2025 CX Leaders Trends & Insights: Consumer Edition report [3], produced in partnership with Transcom, shows a disconnect. In 2025, 78% of consumers reported using self-help tools, up from just 55% the year prior. Adoption is up. Expectations are up. And yet, self-help solutions ranked dead last in consumer perception of experience quality, trailing even social media support.

The misalignment between usage and satisfaction highlights a growing “Experience Gap,” a term the report uses to capture the widening divide between what consumers expect from AI-driven CX and what they actually get. Most troubling? When self-service fails, it doesn’t just frustrate; it drives churn. Nearly half of consumers say they’ve stopped or slowed doing business with a company due to poor customer support, with difficulty reaching a human being topping the list of frustrations.

The lesson here isn’t to pull back from AI. It’s to measure its impact with the same precision as human-led channels.

This is a Strategic Conversation

Leaders know that metrics aren’t just numbers; they’re signals. When we measure something, we’re saying it matters. Customer satisfaction for AI isn’t a niche metric. It’s a lens into how automation is serving your customers, supporting your people, and advancing your strategy.

This shift calls for alignment across teams. Technology, CX, and operations leaders must collaborate to define what success looks like for AI and how it will be evaluated. And that definition may evolve. A single score won’t capture everything, but a thoughtful mix of CSAT, sentiment, effort, and trust metrics can provide a more complete view.

Questions Worth Asking

As the conversation around AI satisfaction gains momentum, these questions may help spark productive dialogue within your organization:

- Are we measuring CSAT (or other satisfaction metrics) for AI interactions separately from human ones?

- How do we currently define success for our AI experiences, and does that definition include the customer’s voice?

- Do our measurement tools capture resolution, emotion, and ease?

- Are we tracking satisfaction trends over time and across channels?

- How does feedback from AI experiences flow into our governance, product, and training teams?

These are not just tactical questions. They are leadership questions. And the answers will shape not only how AI is used, but how it’s experienced.

The Role of the Back Office in AI Satisfaction

AI experiences don’t operate in a vacuum. Behind every virtual assistant and intelligent bot lies a maze of back-office processes, including escalations, ticketing systems, and fulfillment workflows, that can either reinforce or erode customer trust. Yet most companies still measure AI in isolation, overlooking the broader operational ecosystem that makes or breaks the customer journey.

In our CX Without Silos: Bridging Front- and Back-Office Operations to Elevate CX report [4], produced in partnership with NiCE, 63% of CX leaders rated their back-office performance as “Good” or “Very Good,” yet nearly half still report underinvestment in this area, and only a third are satisfied with the technology supporting it. The takeaway? You can have the flashiest AI interface on the market, but if the workflow behind it is broken or disconnected, CSAT will suffer.

Leaders serious about measuring and improving AI satisfaction must go beyond the UI layer. They should ask: Did our AI escalate appropriately? Was fulfillment triggered in a timely manner? Did the sentiment data surface to the back-office team in a way that informed resolution?

These are the seams where most CX breaks down, and the places where AI satisfaction can be meaningfully improved.
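One way to operationalize those questions is to join AI conversation records with ticketing and fulfillment data and flag the seams each interaction crossed. The Python sketch below is purely illustrative: the `AIInteraction` fields and the 24-hour fulfillment threshold are hypothetical placeholders, not fields or defaults from any particular ticketing or CRM system.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class AIInteraction:
    # Hypothetical fields: map them to whatever your systems actually record.
    interaction_id: str
    needed_human: bool            # did the case actually require an agent?
    escalated: bool               # did the AI hand off to an agent?
    fulfillment_delay: timedelta  # time from AI commitment to back-office action
    sentiment_forwarded: bool     # did sentiment data reach the resolving team?


def seam_flags(ix: AIInteraction, max_delay: timedelta = timedelta(hours=24)):
    """Return the back-office seams where this AI interaction broke down."""
    flags = []
    if ix.needed_human and not ix.escalated:
        flags.append("missed escalation")
    if ix.escalated and not ix.needed_human:
        flags.append("unnecessary escalation")
    if ix.fulfillment_delay > max_delay:
        flags.append("slow fulfillment")
    if ix.escalated and not ix.sentiment_forwarded:
        flags.append("sentiment not surfaced")
    return flags


example = AIInteraction(
    interaction_id="A-1001",
    needed_human=True,
    escalated=False,
    fulfillment_delay=timedelta(hours=30),
    sentiment_forwarded=False,
)
print(seam_flags(example))  # ['missed escalation', 'slow fulfillment']
```

Tallying these flags alongside CSAT for the same interactions can help show whether low AI scores trace back to the conversation itself or to the workflow behind it.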

A More Complete Picture of Experience

Customer satisfaction is not the only way to measure quality, but it’s a familiar, scalable starting point. Layering in other measures such as effort scores, trust indicators, and emotion analysis can help organizations evolve beyond a transactional view of satisfaction.

Ultimately, the goal isn’t to hold AI to the same standards as human service; it’s to hold it to the same level of care. As AI becomes more embedded in the customer journey, leaders have a unique opportunity to redefine what experience means not just for efficiency’s sake, but for empathy, clarity, and loyalty.

The next chapter of CX will be shaped not just by how well AI performs, but by how well it is understood. And that starts with asking, not assuming, how customers feel.

Article Links

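1. CX Today: https://www.cxtoday.com/workforce-engagement-management/legacy-contact-center-metrics-are-on-the-way-out-heres-what-will-replace-them/

2. Harvard Business Review: https://hbr.org/2025/09/make-sure-your-ai-strategy-actually-creates-value

3. Execs In The Know, 2025 CX Leaders Trends & Insights: Consumer Edition: https://execsintheknow.com/2025-cx-leaders-trends-and-insights-consumer-edition/

4. Execs In The Know, CX Without Silos: Bridging Front- and Back-Office Operations to Elevate CX: https://execsintheknow.com/cx-without-silos-bridging-front-and-back-office-operations-to-elevate-cx/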

Customer Experience Is Becoming Intelligent: What Leaders Need to Know NowArtificial intelligence is rapidly becoming the intelligence layer within modern customer experience operations. The most impactful organizations are amplifying human capability through cooperative intelligence—embedding AI across the full CX lifecycle to improve efficiency, reduce risk, and unlock entirely new levels of service. In this session, Mark Vange, CTO and Founder of Autom8ly, will share how AI is transforming CX through capabilities including autonomous agents, real-time agent assist, intelligent transcription, automated QA and compliance monitoring, and proactive operational alerting. These systems continuously observe, learn, and adapt from customer interactions—helping organizations improve agent performance, detect fraud and operational waste earlier, and ensure consistent service quality at scale. Critically, these capabilities are now operating successfully in highly regulated environments such as healthcare and financial services, where compliance, auditability, data protection, and operational reliability are non-negotiable. Mark will explore how ROI CX is leveraging this intelligence to enhance service delivery in these demanding environments—empowering agents with real-time insight while strengthening compliance, resilience, and operational control. You’ll learn:

Thanks to Our Partners

|

Beyond Automation: Social CX in the Age of AIAs customer service continues its shift toward social and asynchronous channels, AI is fundamentally changing how brands engage, respond, and scale. Traditional voice and chat are no longer the primary focus; instead, AI is quietly streamlining these channels while social media becomes the front line for service, community building, and brand growth. With rising expectations for responsiveness across earned and owned channels, CX leaders are navigating increased social volume alongside limited, high-risk AI options that can easily feel inauthentic to highly digital consumers. In this session, Chris Wallace, SVP Global Growth at IntouchCX, along with Laura Wheeler, Director of Social Media Customer Care at Audible, examine what this shift means once efficiency is no longer the differentiator. The conversation reframes AI beyond productivity, focusing on the evolving value of CX, demographic nuance by brand, and why human connection remains critical, while also including an interactive breakout session where attendees discuss real-world social CX scenarios and how to apply AI without sacrificing authenticity. Key Takeaways

Thanks to Our Partner

|

Stop Guessing: Take Control of the CX Buying ProcessThe CX technology landscape is expanding at a dizzying pace. With the rapid influx of Voice AI, CCaaS, and agentic tools, distinguishing between “market noise” and “strategic necessity” has never been harder. The biggest hurdle for enterprise leaders isn’t just implementing technology, it’s buying it. Join Simplify and Lindsey Ellison, SVP of Business Technology at Globe Life, for an interactive workshop exploring the complexities of procuring technology for their 13,000+ agent organization. Lindsey will share Globe Life’s journey, identifying strategic market shifts and defining the “why” behind their transformation toward modern CCaas and Agentic AI. Participants will:

Thanks to Our Partner:

|

Where CX Strategy Breaks Through: Designing Ideal-State Journeys for Speed, Loyalty & Operational EfficiencyCustomer journeys often break in predictable places, and those breaks cost you time, money, and loyalty. In this Tech Forum session, we’ll pinpoint where friction hides, show how AI eliminates redundant work, and introduce a journey management model that simplifies experiences without sacrificing empathy. Attendees will walk away with a practical framework and action plan to accelerate resolution, reduce operational costs, and deliver more consistent, intelligent customer experiences. Key Takeaways:

Thanks to Our Partner

|

Your Customer Already Told Us: Building Memory into the Agent EcosystemEvery call transfer is a failure point. Every “can you repeat that?” burns time and trust. Most contact centers respond by adding more AI, which makes the problem worse. Without orchestration, you get conflicting responses, lost context, and agents cleaning up messes they didn’t create. Roger Lee shows what happens when you stop stacking AI tools and start coordinating them. Drawing on examples from organizations that cut contacts by 50% while improving quality, he explains how to design clear boundaries for when AI should act, collaborate, or escalate. Over the next few years, conversation will become the primary interface for getting work done. AI prepares and coordinates tasks before humans step in to handle judgment calls and complex decisions. You’ll learn:

Thanks to Our Partner

|

Leading Execution in the Age of AI and Agentic SystemsWhen AI starts acting on behalf of your team, who is accountable? Customer experience is entering a new phase. AI is no longer limited to chatbots, and copilots’ organizations are now experimenting with agentic AI systems that can take action, make decisions, and orchestrate tasks across workflows. While this shift expands what’s possible, it also raises a critical leadership challenge: how do you move toward greater automation and intelligence without compromising accuracy, trust, and the human quality of care? In this Leader’s Choice session, Sarah brings a perspective shaped by her work at Procedureflow to guide a conversation on a key industry shift from AI assistance to AI action and why this makes operational clarity more important than ever. Participants will explore the realities leaders are facing today: • Chatbots handling first contact but lacking process awareness The discussion will focus on how leading organizations are building a foundation where AI, copilots, and agentic systems operate within structured knowledge, governance, and guided workflows. This ensures frontline teams remain confident; customers receive consistent care, and automation enhances rather than undermines trust. Before the session begins, attendees will note their top questions and challenges with AI-driven execution, which will shape the discussion. These real-world inputs will drive a hands-on group exercise where participants identify care gaps, craft opportunity statements, and map the foundations needed for safe, consistent AI-powered execution. Attendees will leave with a leadership perspective on designing CX environments where intelligence, autonomy, and human judgment work together delivering faster, more personalized service without compromise. Thanks to Our Partner

|

See AI Transform Skill Development LIVE — How Your Peers are Slashing Ramp Time & Accelerating UpskillingFrontline performance is the single biggest driver of customer experience. But traditional training doesn’t scale, doesn’t stick, and rarely prepares teams for real-world customer interactions. In this high-impact session, CX Learning and Operations expert Casey Denby reveals how leading enterprise brands—including Chase, Marriott, State Farm, Comcast, JetBlue, Nordstrom, and MLB—are using AI-powered, lifelike simulations to accelerate skill development and deliver measurable CX results, fast. Casey breaks down the science behind how people actually learn, and shows how AI-driven practice environments dramatically improve retention, confidence, and performance—without adding operational complexity. You won’t just hear how it works. You’ll experience it. In this live, interactive session, you’ll:

Thanks to Our Partner:

|

How Urban Outfitters Cracked the Code on AI AgentsLaunching AI at enterprise scale is never simple, especially across beloved global brands with distinct voices, loyal customers, and complex operational structures. But that’s exactly the challenge Joannah Holmes, Director of Customer Service at URBN, set out to solve. In this mainstage case study, Joannah takes attendees behind the scenes of an ambitious AI agent rollout in modern retail CX. Over a two-week sprint, URBN deployed agentic AI across four marquee brands: Anthropologie, Free People, Urban Outfitters, and Terrain. This session will explore the real story behind the launch: the strategy, the cross-functional alignment, the unexpected hurdles, and the breakthrough moments that made it possible. Joannah will share how her team ensured the AI agents not only mirrored each brand’s identity but also unlocked measurable operational gains to improve speed, accuracy, agent workload, and customer satisfaction. Most importantly, she’ll discuss what she’s learned about responsibly scaling agentic AI inside a global retail ecosystem, and what’s coming next for URBN. Attendees will walk away with a rare, inside look at enterprise AI transformation in motion, including what it really takes, what’s required to build trust, and how to set a foundation for sustainable, scalable impact. Thanks to Our Partner:

|

How Tory Burch and Vuori Implemented Phone AI in 2025Tory Burch herself singled out the CX team at their end-of-year companywide all-hands for the brand’s most successful AI work of 2025. Vuori started their implementation weeks before Black Friday, had time to spare, and flipped skeptical leaders just one quarter post-launch. The question is how. Flip is going behind the scenes with the changemakers who made bold bets, learned fast, and won big. We’ll start at the beginning, when there was little more than ideas, and travel with them through time as they became market experts, pivoted from failed pilots, drove stakeholder alignment, forecasted ROI, and ultimately transformed some of the most iconic American brands. In this session, learn the real-life twists and turns, tradeoffs, hard calls, and big ‘aha’ moments — and how can you use their experience to shortcut your own learnings as you eye bigger, bolder, customer-facing AI deployments in your organization. Key Takeaways:

Thanks to Our Partner:

|

Automation Without Alienation: A People-First Workforce Strategy in the Age of AIThis session shares a real-world transformation story of how a large enterprise contact center moved from early skepticism about automation (“it will replace jobs”) to a people-first strategy that improved both operational performance and employee experience. The speakers will explore the strategic inflection point that led Cigna to adopt real-time automation—and the leadership work required to earn buy-in beyond senior leadership. The discussion will highlight key lessons, measurable outcomes, and how today’s momentum in AI connects to the future of workforce orchestration—illustrating how automation and AI can work together to support scalable, resilient workforce strategies beyond the contact center. Key takeaways for attendees

|

CX Masterclass: A Look at the Ritz-Carlton Culture of ExcellenceExceptional customer experiences don’t happen by chance. They are built through culture, clarity, and disciplined execution. In this masterclass, Amanda Joiner, Global Vice President of The Ritz-Carlton Leadership Center, shares how The Ritz-Carlton has sustained legendary service by empowering employees to truly own the customer experience. The session will explore the core frameworks that guide behavior and decision-making across the organization, including the Credo, Motto, Three Steps of Service, Employee Promise, and the 12 Service Values. Together, these elements establish a shared language for service and a consistent standard for how care shows up in moments that matter. Beyond philosophy, this session will showcase the practical systems that make excellence repeatable: how thoughtful selection, onboarding, recognition, and continuous upskilling reinforce a culture of ownership and enable employees to act with confidence and purpose. Attendees will take away clear, actionable perspectives that elevate service culture across any organization, along with opportunities for peer interaction, guided table discussions, and reflection to help leaders connect the concepts to their own teams and environments.

|

Making CX a C-Suite PriorityCX friction is easy to spot on the front lines and far harder to elevate into an executive priority. Too often, CX leaders come armed with anecdotes or dashboards that resonate locally but fail to translate into enterprise-level urgency. This session explores how leading organizations bridge that gap by pairing a clear narrative with hard data tied to business performance, risk, and customer outcomes, and turning CX pain into an executive ready business case. Through real customer examples, Reddy will examine how a small, focused CX pilot delivered measurable wins that earned CEO attention and sponsorship. Attendees will leave with a practical playbook for translating frontline insight into boardroom language and how to build the internal momentum needed to scale CX initiatives across the enterprise. Thanks to Our Partner:

|

Next Level Learning & Development: The Operational Advantage of Learning Done RightEffortless customer experiences begin with effortless learning—learning that happens inside operations, not alongside them. Next-level Learning and Development blends seamlessly into day-to-day execution, transforming operations into an operational learning environment where learning is strategic, immediate, and continuous. Grounded in cognitive science and adult learning research, these approaches require no new tools or budget, yet deliver immediate, measurable improvements in performance and customer ease. This session challenges the outdated, siloed model where Learning and Development is solely responsible for performance. Instead, it positions performance as a shared operational continuum—owned by leaders, reinforced in the moment, and sustained through science-backed practices. In an era where organizations are racing toward AI without understanding how people actually learn, this conversation returns to the foundations of research, demonstrating how trusted, evidence-based strategies can be applied immediately to maximize performance in dynamic environments. Attendees will learn:

|

Frontline Empowerment: The Heart of CX TransformationTransformation is happening all around us — in technology, AI, and business models — but the real story of customer experience isn’t about systems; it’s about people. In this keynote, Kevin McDorman, Vice President of Customer Care at Southwest Airlines, will share how the frontline isn’t just executing service — it is the strategy for creating seamless, friction-free experiences. Through real stories from Southwest, Kevin will show how empowering frontline teams to act with judgment, empathy, and autonomy drives measurable improvements across every metric. Attendees will see how putting people first — not scripts, dashboards, or automation alone — creates solutions in the moments that matter most, transforms policies into guardrails, and positions technology as a partner, not a replacement. This session challenges leaders to rethink CX strategy: how to anticipate customer needs, remove friction, and give teams the authority to deliver results. Attendees will leave inspired to view the frontline not just as execution, but as the engine of enterprise-wide customer experience. About Kevin Kevin McDorman serves as Vice President of Customer CARE at Southwest Airlines, assuming the role on May 12, 2025. He leads a hybrid workforce of more than 3,500 Employees across the continental U.S., overseeing all Customer-initiated service channels—including phone, email, and social platforms—as well as proactive customer communications, travel disruption strategy and execution, and Southwest’s partnership with the U.S. Department of Transportation. Under Kevin’s leadership, Customer CARE supports more than 140 million Customers traveling through 117 airports across 11 countries, managing over 20 million Customer contacts anually. 
His organization plays a critical role in delivering the legendary hospitality that defines the Southwest brand—whether helping Customers navigate irregular operations, providing real-time digital communications during travel disruptions, or resolving complex issues with empathy and expertise. Kevin is focused on modernizing the end-to-end Customer journey by integrating digital, operational and voice-of-customer insights; strengthening alignment with departments across the Company; and enabling a consistent Customer support model that reflects Southwest’s culture of service and connection. Prior to joining Southwest, Kevin built a distinguished 22-year career at AT&T, where he held senior roles spanning customer service, call center operations, finance, vendor management, and strategic development. He led organizations supporting more than $100 billion in revenue, oversaw international operations in 16 countries across 12 global outsourcing partners, and delivered a call-center transformation that generated $700 million in operating-expense savings while reducing customer contacts by 32% and lowering cost-to-serve per subscriber by 18% year-over- year. He has a history of delivering best-in-class customer service by leveraging groundbreaking innovation in Big Data, Gen AI, Robotics, and Global Sourcing. Known for his collaborative leadership style, strength in building high- performing teams and ability to connect people, process, and technology, Kevin mentors leaders across functions and drives performance through clarity of vision, purposeful innovation, and customer-centric culture.

|

Exporting Culture: How to Extend Your Brand’s Soul Into an Outsourced CX ModelEvery brand has a culture—few know how to export it. As more companies blend internal CX teams with outsourced BPO partners, the central challenge becomes ensuring that customers experience the same authenticity, empathy, and values no matter who answers the call. In this interactive workshop, GTCX CEO Bill Colton and Azure Standard’s CX leader Karen Slusher unpack how a brand’s “internal DNA” can be successfully extended beyond its four walls. Azure Standard, with its uniquely passionate customer following and deeply relationship-driven approach, has cultivated a CX model where agents are empowered to spend real time with customers, prioritize connection over speed, and build long-term trust. Translating that ethos to an external partner might seem impossible—yet Azure and GTCX have built a blueprint for doing exactly that. Attendees will learn:

This session blends strategic insight with practical guidance from two leaders who have done it in the real world—not in theory. Thanks to Our Partner:

|

The Standard Is the StrategyCX excellence isn’t created by software, scripts, or perfectly defined processes. It’s created by leaders, and the standard they’re willing to set for themselves. In this keynote, Travis Brown, former NFL quarterback and current pastor, delivers a direct challenge to CX executives: your team’s performance will never exceed the standard you personally embody. Travis explores the uncomfortable but liberating truth that success can often mask slipping standards, and teams will always follow the tone the leader sets, especially when conditions aren’t ideal, pressure is high, or you’re leading teams you didn’t personally handpick. This session will reshape how you think about standards, how they’re created, reinforced, and multiplied across the enterprise, and why EX will always dictate CX. Through powerful stories and practical leadership insight, Travis helps leaders see that the most scalable strategy for CX excellence is not a system… it’s a standard. And it starts with you. About Travis: Travis Brown is a former NFL quarterback who played for 6 seasons (Seahawks, Bills, Colts). He now channels his passion for leadership, teamwork, and culture into developing others. He oversees campuses at Christ’s Church of the Valley (CCV)—one of the largest churches in the country with 19 campuses and over 55,000 in attendance every weekend—helping shape environments where teams thrive and people grow. He is also the founder of 180 Sports, a nonprofit dedicated to using sports to impact culture by training coaches, supporting athletes, and providing equipment. Through his experiences on and off the field, Travis has gained a deep understanding of what it takes to build strong teams, cultivate winning cultures, and help individuals reach their full potential. Beyond his professional work, Travis has been married to his wife, Corinna, for nearly 25 years, and together they have five children. 
His greatest passion is investing in people—whether on the field, in the workplace, or at home—helping them maximize their potential and lead with clarity, resilience, and purpose.

|

Delivering Care Without Compromise: The Human-Tech Partnership at VisaFrom her first job behind a supermarket checkout to leading Visa’s Global Client Care organization, Katie Beaudry has always understood that customer experience begins with empathy. What once required face-to-face presence has evolved into a world where data, AI, and predictive design enable care at scale — yet the heart of CX remains human. In this keynote, Katie will share how Visa is reimagining every stage of the service journey, building “self-healing” experiences and agentic AI systems that empower both customers and care teams to deliver seamless, personalized solutions in real time. But transformation isn’t about technology alone; it’s about foresight, culture, and connection. Katie explores how her teams are blending human intuition with intelligent automation to deliver care without compromise. She’ll challenge leaders to rethink what preemptive service looks like in practice, how to equip teams for success in an AI-powered ecosystem, and how to keep the human touch alive at a global scale. Attendees will walk away inspired to raise the bar, not just by solving customer problems, but by anticipating them. About Katie Katie leads Visa’s Client Care organization, which is part of Client Services. Client Care’s purpose is to provide superior customer support for Visa’s products and services across a full range of client types, from cardholders whom Visa interacts with ‘on behalf of’ banks and credit unions (our revenue-generating managed services departments), to helping merchants, acquirers, fintechs, and other financial institutions directly with their service queries and technical issue resolution. Client Care has more than 1,500 highly skilled team members who support 18 languages, and our award-winning team annually handles 18M customer touchpoints across six channels, including phone, email, live chat, self-service portals, chatbot, and social media. 
Before joining Visa in February 2022, Katie spent 13 years with HSBC Bank, both in London and New York. Her first role with HSBC started in Corporate Communications, supporting large, multi-year transformation programs for the Finance and Risk functions. She then moved to New York to lead Employee Communications for the US business and progressed to lead the Customer and Employee Experience teams. Later, she ran the US contact center and international banking organizations. Before HSBC, she was a Senior Corporate and Legal Affairs Manager at Tesco in the UK, handling national media and other public relations. She is a co-active coach trained through the Coaches Training Institute and has a joint first-class honors degree in English and Media from Sussex University, UK. She lives in Florida with her husband and has three children. In her spare time, she enjoys traveling, cycling, and eating out.


Walmart’s People-Led, Tech-Powered Approach to Customer Support

As customer and associate support reaches a defining moment, CX leaders are being challenged to evolve faster than ever without losing the human connection that defines great service. In this keynote, Meghan Nicholas, Vice President of Customer Engagement Services at Walmart, will explore how large, complex organizations can modernize their service operations through a people-led, tech-powered approach. Drawing from real-world experience at scale, she will examine how today’s leaders are balancing rising expectations, accelerating technology, and the enduring need for empathy and trust across every customer touchpoint. Attendees will gain perspective on designing service models that are both operationally resilient and deeply human, where innovation enhances, rather than replaces, meaningful connection. With the continued growth of automation, AI, and self-service, this session offers a timely, high-level look at how CX leaders can drive efficiency, clarity, and care simultaneously, setting a new standard for performance without compromise.

About Meghan

Meghan Nicholas is a leader in digital transformation, customer experience, and operational strategy. As Vice President of Care Experience and Operations at Walmart, she leads customer and associate contact centers, driving innovations that enhance customer satisfaction while optimizing operations at scale. Meghan is passionate about leveraging technology, data, and AI to streamline processes and eliminate friction in customer interactions. Before joining Walmart, Meghan held leadership roles in supply chain, operations, and analytics at Peloton, Dollar General, and The Home Depot. With deep expertise in operational strategy and customer care, she has led large-scale transformations that drive efficiency, innovation, and business growth in complex, high-volume environments.
She holds a Bachelor of Science in Business Administration from Auburn University.


CX as a Growth Engine: Proving Value in the Boardroom

Customer experience leaders know that delivering exceptional service drives loyalty, retention, and long-term business value. Yet, securing investment for new tools, talent, and innovations often requires more than intuition or anecdotal wins. Many CX leaders still face challenges in articulating a compelling financial case for investment, translating the less tangible benefits of customer care into metrics that resonate with CFOs, CEOs, and boards. Without a strong internal case, initiatives risk being deprioritized in favor of more easily quantified projects. This panel will dive into proven strategies for building persuasive ROI models that tie customer care excellence directly to revenue growth, reduced churn, and operational efficiency. Panelists will share best practices for quantifying knock-on effects like customer lifetime value, brand advocacy, and competitive differentiation, while also offering guidance on how to educate senior leadership on the true business impact of CX. Attendees will leave with practical tools and storytelling techniques to strengthen their internal advocacy and secure the resources needed to elevate customer experience initiatives.

Thanks to Our Partner:


Beyond Deployment: The Quality Assurance and Measurement Essentials for AI-Powered CX

As AI reaches new levels of deployment within the contact center, the focus is all too often on speed to market rather than sustained quality and performance. Without rigorous monitoring, these solutions can drift from intended outcomes, generate inconsistent or inaccurate responses, and even erode customer trust. The difference between AI-powered solutions that delight versus ones that frustrate often comes down to how well performance is measured, tuned, re-tuned, and governed over time. This panel will explore why monitoring AI quality must be as much a priority as implementing AI itself. CX leaders will share emerging best practices for establishing performance benchmarks, aligning AI outputs with business and customer goals, and setting up governance models that evolve alongside the technology. Attendees will gain practical insights on how to track both quantitative and qualitative results, run an effective QA layer, detect bias or drift, and create feedback loops that ensure AI remains a reliable extension of the customer experience strategy. During this panel you’ll learn about:


CX Livewire: Polling, Predictions, and Instant Feedback

Just as data drives the vital operational adjustments that CX leaders make every day, data also fuels the strategic decisions that power entire programs. During this session, panelists will dive into the data on critical CX topics, with the goal of contrasting results from recent Execs In The Know CX research with live polling of the CRS Amelia Island audience. Real-time insights will help audience members benchmark themselves against the room, while panelists offer a look into some of the biggest challenges and successes at their own organizations. Come prepared to participate in this high-energy, fast-paced session, which is sure to produce both surprises and confirmations.

Thanks to Our Partner:


Beyond Recovery: Shaping a CX Model That Anticipates, Prevents, and Delights

Most service organizations remain focused on reacting to issues after they arise, yet the real opportunity lies in anticipating and preventing problems before customers ever need to reach out. This panel will explore how CX leaders are rethinking their approaches to become more predictive and proactive, leveraging a mix of customer data, journey signals, and emerging AI capabilities to spot friction points early and deliver preemptive support. Panelists will discuss both the opportunities and the challenges in shifting from recovery to prevention: making the internal case for investment in predictive solutions, building the right organizational support, and ensuring governance and trust remain at the center. Expect practical insights into how companies are both executing and thinking about proactive outreach and empowering agents with “next-best actions,” all while learning from both successes and setbacks. Attendees will leave with a clearer view of how proactive strategies can reduce preventable contacts, elevate satisfaction, and lower costs while strengthening long-term customer relationships.

Thanks to Our Partner


Uncompromised Leadership — When Self-Respect Becomes Priceless

When the stakes are high and the pressure is on, real leadership is revealed not by strategy decks or polished statements, but by the courage to act with integrity, even when it costs something. In this intimate discussion, two EITK advisory board members and seasoned senior CX executives share candid stories of when their values were tested under fire. True leaders stand firm when the easiest choice isn’t the right one and when holding their ground carries personal or professional consequences. We’ll explore how leaders evolve from good intentions to becoming “the one” others can count on: the person who speaks up, takes risks, and acts on their convictions when everyone else stays quiet. The conversation will examine how self-respect becomes the source of leadership courage, fueling trust inside organizations and loyalty among customers. In this session, you’ll learn:

Expect real stories of integrity under pressure, from culture flashpoints to customer-impacting crises, and leave with practical insights on how courage, vulnerability, and personal accountability can transform organizations. Attendees will walk away inspired to embrace self-respect as a leadership operating system that not only protects employees and customers but also creates the foundation for authentic, lasting trust.

Thanks to Our Partner:


Introduction by:

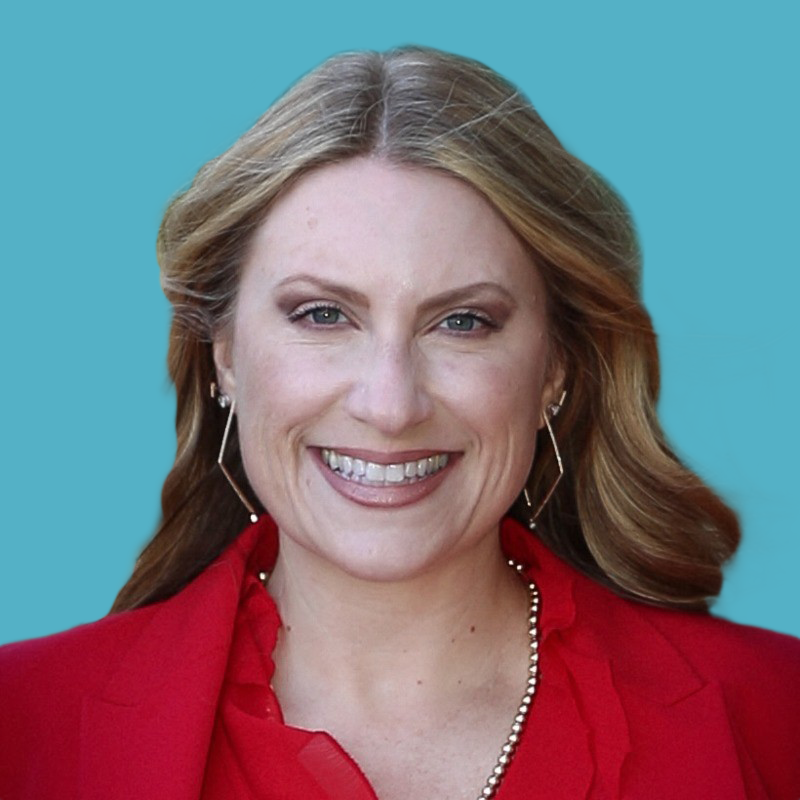

Ruth Rose

Vice President of Strategic Growth

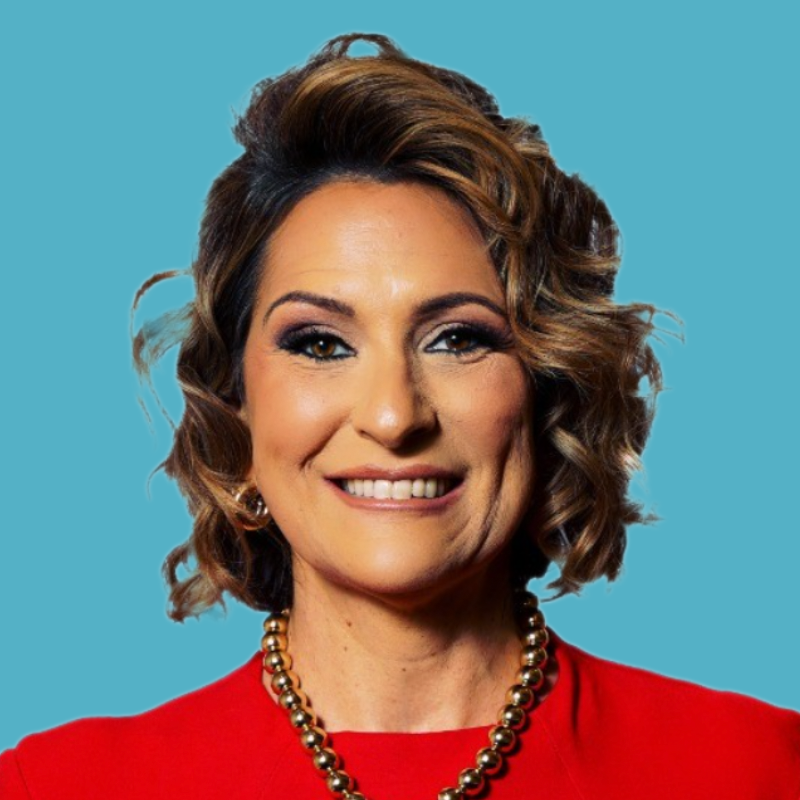

Lisa Oswald

Senior Vice President, Customer Service

Carolyne M. Truelove

Vice President, Customer and Operations Excellence


From Data to Experience: Transforming the Consumer Journey at Michelin

Consumer care is evolving, and Michelin is setting a new standard in pairing advanced analytics with human expertise. As a premium brand, Michelin demands a premium consumer experience that anticipates needs and caters to personalized consumer situations. Through innovative technology from IntouchCX and Michelin, agents are empowered with real-time knowledge prompts, surfacing critical information for complex or uncommon scenarios. Combined with AI-driven roleplay and adaptive training, this approach equips agents to operate beyond rigid scripts, balancing autonomy with precision. This also provides the groundwork for better Voice of the Consumer reporting and CRM predictive insights to guide future personalized journeys without overcommunication. Key takeaways:

This session will feature an interactive breakout exercise, giving participants the opportunity to experience the workshop concepts firsthand and apply them in a collaborative setting. Thanks to Our Partner



How Peloton Fixed Its MSAT Headache

Peloton was facing member experience challenges across its global partner network. To fix this problem, Peloton did what any great brand would do: it hit the reset button. The result? A laser-focused turnaround on its new north star metric: MSAT. Join this session to see how Peloton and ibex:

If you want to see how one of the world’s most recognizable brands turned MSAT from a pain point into a proof point, don’t miss this Moment of Brilliance. Thanks to Our Partner



From Transformation to ROI: Lessons from CX Leaders

The contact center is at a turning point: leaders are under pressure to deliver efficiency without eroding the customer experience. In this Shop Talk, Todd Stanley, former COO of Achieve and former senior CX leader at Intuit, will share candid lessons from years of leading large-scale transformations. Drawing on his experience deploying enterprise AI, Todd will discuss how organizations can:

In this session, we’ll explore what’s next for contact centers as AI Agents move from concept to reality, reshaping how routine work is handled and how human expertise is elevated. Leaders will gain perspective on how to prepare their organizations today to capture these opportunities tomorrow. Thanks to Our Partner



The AI Menu in Action: Reimagining the Customer Journey with AI at Every Phase

AI has the potential to transform every touchpoint of the customer journey, but only if leaders know where and how to apply it for measurable impact. In this highly interactive session, you’ll work alongside fellow CX decision-makers to identify critical challenges across the pre-interaction, interaction, and post-interaction phases. Using “The AI Menu” as a framework, you’ll explore real-world examples, from AI Receptionists and Intelligent Virtual Agents that predict customer needs to AI-powered analytics that uncover post-call insights, and co-create targeted, AI-driven strategies to overcome these challenges. You’ll leave this session with actionable opportunity statements, fresh perspectives from your peers, and practical tools to integrate AI across your customer engagement processes. Whether you’re optimizing the moments before a customer ever speaks to an agent, enhancing live interactions with real-time intelligence, or using post-call insights to drive continuous improvement, this session will equip you to design seamless, impactful customer experiences powered by AI at every phase.

Thanks to Our Partner

Glen Tillman

Senior Director, Product Marketing


Next-Gen Agent Onboarding: How Reddy and Morgan & Morgan Transformed Training

Outdated training tools are failing modern customer service agents, leaving them underprepared, under-coached, and overwhelmed. In this interactive Shop Talk, Reddy invites you to step into the shoes of a newly hired agent navigating legacy onboarding and the daily grind of frontline support. Through hands-on simulation, reflection, and role-play, you’ll uncover the gaps in traditional enablement and see why it’s time to get your LMS truly “Reddy” for your agents. Key Takeaways:

Thanks to Our Partner



Building Empathetic Voice AI: Architecting Real-Time Conversations at Scale

What makes a voice AI experience feel genuinely human? It’s not just about getting the words right. It’s about understanding tone, intent, and emotion in the moment, and responding in a way that builds trust. Behind every seamless voice experience is a complex orchestration of intent recognition, sentiment analysis, and real-time decisioning. This workshop takes you inside the engine of a truly AI-native voice platform where you’ll explore how LLMs, NLU, and proprietary tuning come together to create voice agents that actually feel human. Through a live product demo, you’ll see what’s possible when empathy meets engineering. This workshop will explore:

Thanks to Our Partner



Pilot with Precision: Aligning Generative Agent Pilots to Risk Tolerance and ROI

Launching a generative AI pilot is easy. Launching the right one, one that aligns with your risk tolerance, delivers measurable outcomes, and sets you up to scale, is far more difficult. Presented by ASAPP and PTP, this session will help CX leaders choose, test, and take generative agent pilots to the next level with confidence. You’ll explore:

If you’re looking to quickly accelerate your AI journey and maximize ROI—without overpromising or overengineering—this session is for you.



How AI Is Powering the Next Wave of Omnichannel Customer Service

Today’s customers don’t think in channels. They expect fast, personalized support, wherever they are. From chat and social to messaging apps, email, and phone, customer service is now omnichannel. But with this flexibility comes a new challenge: delivering consistent, contextual, and cost-effective service at scale. In this session, Freshworks will explore how AI is transforming customer support across every channel, not by adding more tools, but by making every interaction smarter. Through a live demo and collaborative discussion, they’ll break down how Freddy AI works across chat and email to resolve common queries, empower agents with insights, and simplify tool complexity. You’ll get a practical look at how it all comes together, including:

Attendees will leave with clear strategies for scaling intelligent support without sacrificing the human touch.

Thanks to Our Partner



TripAdvisor’s Playbook for AI That Gets 5-Star Reviews: How to Avoid the Implementation Mistakes That Break Customer Experience

You’ve seen the pattern: AI projects that start strong, then somehow make everything more complicated. What looked like a quick win turns into months of integration headaches, frustrated teams, and executives asking uncomfortable questions about ROI. TripAdvisor made this exact mistake. Then Tim Barker and his team developed a holistic approach that transformed their entire customer support operation, dramatically improving resolution times while reducing costs and boosting agent satisfaction. In this hands-on session, Tim and Laivly’s Matt Bruno will walk you through the same methodology that delivered these results.

What You’ll Experience:

- Real-World Case Study

- Strategic Framework Deep-Dive

- Interactive Workshop

Walk out with your personalized AI workflow map and phased implementation plan.

Thanks to Our Partner



RingCentral, Inc. (NYSE: RNG) is a leading provider of AI-driven cloud business communications, contact center, video and hybrid event solutions. RingCentral empowers businesses with conversation intelligence, and unlocks rich customer and employee interactions to provide insights and improved business outcomes. For more information, visit: ringcentral.com.


Powered by purpose, TELUS Digital leverages technology, human ingenuity and compassion to fuel remarkable outcomes and create inclusive, thriving communities in the regions where we operate around the world. Guided by our Humanity-in-the-loop principles, we take a responsible approach to the transformational technologies we develop and deploy by proactively considering and addressing the broader impacts of our work. Learn more at: telusdigital.com

ibex delivers innovative BPO, smart digital marketing, online acquisition technology, and end-to-end customer engagement solutions to help companies acquire, engage and retain customers. ibex leverages its diverse global team and industry-leading technology, including its AI-powered ibex Wave iX solutions suite, to drive superior CX for top brands across retail, e-commerce, healthcare, fintech, utilities and logistics. For more information, visit: www.ibex.co


Chad McDaniel

Chad McDaniel is a well-known advocate for the Customer Management Executive. He works tirelessly to showcase the success of today’s Customer Executive. Execs In The Know believes that advancements in customer success are created when leaders share experiences, outlooks, and insights: “Leaders Learning From Leaders.” Our mission is to provide measurable value to the corporate customer executive by delivering customer experience improvements in an efficient and effective manner. Execs In The Know connects customer professionals to valuable content, thought leadership, industry insight, peer-to-peer collaboration and networking opportunities. We support the customer professional in a live format by hosting national events (Customer Response Summit) and conducting a series of Executive Think Tanks.


Andy Watson

Andy Watson is the Senior Product Marketing Manager for RingCentral’s CX portfolio. Prior to joining RingCentral, he held UCaaS and CCaaS Product Marketing roles at Broadvoice and Mavenir. Additionally, he has extensive telecom experience with Nortel Networks, Ericsson, and GENBAND (now Ribbon Communications), serving in various roles in Technical Documentation, Program Management, and New Product Introduction.


Alex Avery

Alex Avery is a product leader at Sierra, where he partners with customer experience teams at innovative brands to deploy AI agents that elevate support operations and drive better customer outcomes. With a focus on practical AI integration, Alex helps companies accelerate automation without compromising on service quality. His recent work includes leading successful deployments for brands like Minted and Pendulum, where Sierra’s agents now handle a wide range of customer interactions across chat, email, and voice channels. Prior to Sierra, Alex cofounded Mainstreet AI, a product development firm which helps companies accelerate their adoption of AI, and Gather, a software platform for museums and universities to manage their member experience.


Flip It Live: How Belk Launched Voice AI in Record Time

Why talk about launching AI when you can watch it be deployed in minutes? In this interactive session, you’ll see a real retailer, Belk, set up Flip’s Voice AI from scratch to fully operational in minutes, proving what most vendors call “impossible.” Belk’s Senior Director of Customer Experience, Jessica Patel, joins Flip’s Co-Founder and Chief Revenue Officer, Sam Krut, and Vice President of Demand & Partnerships, Shawn Li, to share how the 137-year-old retailer replaced its IVR with a modern Voice AI experience and went from first conversation to launch in only 74 days. They’ll explain how Belk put customer-facing AI on its phones without a long, custom, expensive build, and reveal the early results it is seeing in its most important channel. Join this session for laughs, learnings, and a guide for how your brand can launch Voice AI, too. You’ll see and hear:

Watch a brand “flip the script” on phone-channel CX and walk away with a practical roadmap to launch Voice AI.

Thanks to Our Partner



Unlocking Call Center Revenue: How Princess Cruises Is Driving Growth

Most call center agents spend more time figuring out who to call or what to say than actually talking to guests. Princess Cruises is flipping that model, using AI and real-time data to put the right leads, the right insights, and the right timing directly into agents’ hands, making their jobs easier and more rewarding. Join Alvin Stokes, VP of Global Reservation and Guest Services at Princess Cruises, and Scott Sahadi, CEO of harpin AI, as they reveal how new tools are helping agents close more bookings with less guesswork. You’ll learn how Princess Cruises is:

This isn’t about more calls. It’s about smarter calls that convert — faster. If you want to see what significant MoM revenue growth looks like in action, don’t miss this.



Transforming Your Contact Center into a Modern Resolution Engine

For too long, themes in CX have been focused on deflection, asking, “How many customers can we avoid?” The more meaningful question is: “How can we resolve their issues?” Customers don’t care how many steps you eliminated in your workflow or how much faster your team completed their post-call dispositions. They care about one thing: Was my problem solved quickly and effectively? As CCaaS continues to evolve, contact-center leaders must meet ever-higher demands for efficiency, personalization, and seamless omnichannel service. Teams can no longer tolerate half-baked channels, siloed agents, or reporting nightmares. Today, they’re embracing AI-powered, unified workspaces that weave rich contextual data with intelligent, empathy-driven tools so every interaction becomes fast, personal, and on-brand at scale. In this session led by Zendesk, you’ll explore real-world examples of contact centers around the world that have:

You’ll walk away with actionable strategies to transform your contact center into a modern resolution engine by:

Thanks to Our Partner



Designing the Modern Contact Center Experience: Human + AI Collaboration, Omnichannel Orchestration, and Voice Platform Transformation

As customer expectations evolve and AI continues to reshape service delivery, contact centers must transform into agile, intelligent experience hubs. This forward-looking workshop explores how to design the modern contact center by integrating the best of human empathy, agentic AI, and omnichannel strategy. Participants will dive into how to orchestrate seamless journeys across voice, chat, email, and social, creating consistent experiences regardless of channel. We’ll explore how to modernize legacy systems and elevate voice platforms through next-generation IVR (NGIVR) and agentic AI that empowers agents and streamlines customer interactions. A key focus will be on Human + AI collaboration: learning how to optimize handoffs between bots and humans, design emotionally intelligent experiences, and deploy next-gen agent assist tools that elevate frontline performance. Through practical frameworks, real-world case studies, and collaborative exercises, attendees will leave with a better understanding of:

A must-attend session for contact center leaders focused on delivering measurable, strategic value through every customer interaction. Thanks to Our Partner


Sierra is the platform that helps businesses build better, more human customer experiences with AI. Founded by industry veterans Bret Taylor and Clay Bavor, Sierra enables companies to deploy autonomous AI agents that provide fast, personalized, always-on support across channels. Trusted by brands like SiriusXM, Sonos, and ADT, Sierra’s flexible model meets customers where they are, offering everything from enterprise-ready, fully supported deployments to build-your-own no-code journeys. Both CX and Engineering teams can move fast, choosing the right approach to fit their evolving needs. For more information, visit: sierra.ai


The Human Advantage: Why People Still Power Great CX in the Age of AI

In an era dominated by AI and automation, it’s easy to lose sight of what’s most critical in delivering standout customer experiences: the people making them happen. Amid the tech-driven transformation of CX, one truth remains: companies that thrive are those where agents, and the teams supporting them, are engaged, empowered, and equipped to deliver at a high level. In this dynamic session, Terri DeMent, Director of Consumer Engagement at Nestlé Purina, and Amy Bouthilet, VP of Global Talent at Alta Resources, a leading end-to-end CX BPO, will discuss what it truly takes to build and sustain an employee-first culture. Drawing from real-world examples, they’ll share the challenges and breakthroughs of scaling engagement across complex enterprises, and how placing people at the core of CX operations leads to measurable impact, from improved customer satisfaction scores to stronger revenue performance. Backed by a 9-year partnership with Gallup, Alta’s proven approach to employee engagement and leadership development has redefined how to embed culture into the everyday fabric of CX delivery. Attendees will gain actionable strategies to overcome barriers, develop engaged teams, and elevate both employee and customer outcomes in a world increasingly shaped by technology. Key Takeaways:

Join us to discover why, amid rapid technological change, people still drive CX success.



A Masterclass in CX Technology Sourcing

Choosing the right customer experience (CX) technology partners isn’t just a procurement decision; it’s a strategic one. From discovery to delivery, the way you source, evaluate, and manage technology solution providers can make or break your ability to deliver seamless, high-impact customer experiences. In this expert-led panel, seasoned CX leaders will guide you on techniques to find and evaluate the best providers to deliver on your business requirements. You’ll hear real-world examples and gain practical tools for maximizing value from your technology partnerships while staying agile in a fast-evolving digital landscape.

Whether you’re looking to optimize current vendors or source new technology, this panel will offer a blueprint for smarter, more strategic CX technology decisions.



Reality Check: Are AI & Automation Living Up to Their Promise in CX?

AI and automation are no longer emerging tools; they’re embedded in the DNA of today’s CX strategies. From transforming contact centers to driving operational efficiency, they’ve promised smarter, more seamless customer experiences. But are they delivering? This panel takes a clear-eyed look at what’s really happening after implementation. What gains have proven sustainable? Where are expectations falling short? And how are CX leaders rethinking their approach as they face the hard truths of integration, training, measurement, and impact? You’ll hear from leading brands on navigating the evolving balance between humans and machines, including how they’re redefining agent roles, capturing the right metrics, and translating the voice of the customer into iterative, AI-fueled improvements. Whether you’re scaling automation or just beginning to integrate AI pilots, this session offers grounded insights, honest lessons, and forward-looking guidance for building the intelligent contact center of tomorrow.

Thanks to Our Partner



CX Livewire: Polling, Predictions, and Instant Feedback

This fast-paced session brings together a panel of CX leaders who are deeply immersed in the challenges and opportunities facing the industry today. Drawing on their diverse backgrounds and day-to-day leadership, panelists will explore what’s driving progress, what’s standing in the way, and how leading organizations are turning insight into impact. Attendees will walk away with a clearer view of the CX landscape, real-time benchmarking from peer responses, and fresh strategies they can apply within their own teams. By pairing live polling with the core findings of Execs In The Know’s latest research report, we’ll use the session to test predictions, validate (or challenge) existing data points, and capture what’s changed in real time. This approach gives attendees a rare opportunity to see how their organization stacks up, where their peers are headed, and what strategies are gaining traction across industries right now.

Thanks to Our Partner


The Talent Shift: Hiring, Training, and Compensation in an AI World

Customer expectations aren’t the only thing evolving; so are the people behind every interaction. As AI and automation continue to reshape the contact center, they’re not just enhancing tools and workflows; they’re fundamentally transforming the agent role itself. In this new era, agents are expected to do more than follow a script. They’re problem solvers, brand ambassadors, and emotional first responders, navigating increasingly complex issues that require both high-tech fluency and high-EQ finesse. These changes are pushing organizations to rethink everything: how we recruit and train, how we compensate and support, what skills matter most in the modern CX workforce, and how entirely new roles may emerge. This panel brings together CX leaders to unpack how AI is reshaping the future of work: redefining the skills we hire for, influencing compensation models, and transforming how training, reskilling, and upskilling are delivered and absorbed. As the role of the agent evolves, shifting from repetitive tasks to higher-value, empathy-driven work, companies must balance automation with human augmentation and adopt strategies for building and supporting a workforce prepared to meet the demands of a more complex, tech-integrated customer experience. The future is faster, smarter, and more connected, but success depends on leading with intention, investing in emotional intelligence, and designing roles that elevate both technology and humanity.

Thanks to Our Partner


Board Panel: The Signals We’re Missing

In a world flooded with dashboards and metrics, the loudest signals often steal the spotlight, while the most powerful insights go unheard. This panel explores what it means to truly listen to the silent majority of customers: those whose feedback isn’t captured in traditional surveys, whose experiences are filtered through cultural bias, or whose voices are lost in outdated listening systems. Join the EITK Advisory Board as they challenge the current state of customer listening by asking hard questions:

We’ll also explore how AI-powered sentiment detection can help uncover unspoken truths and emerging emotions, if applied with intention and ethics. From tuning into passive signals to reshaping how and where we listen, this conversation will open new possibilities for creating a listening culture that is inclusive, intelligent, and alive. If we want to meet customers where they are, we must hear even what they don’t say. The future of customer experience depends not just on collecting more data but on listening differently.


Incorporating various technologies and working alongside outsourcers produces the best outcomes when strategies are aligned and all parties are in sync. Without that alignment, brands risk inefficiencies across their operations. This session will explore how Ulta, in collaboration with IntouchCX, leverages innovation to shape its technology roadmap, enhance insights and analytics, and optimize workforce planning. The discussion will focus on how strategic sprints, integrated solutions, and automation can drive efficiencies, and will highlight real examples showing how transparency, collaboration, and automated workflows have fostered positive change.

The modern CX landscape is rapidly evolving, driven by the convergence of emerging technologies such as AI and advanced data analytics with human emotional intelligence (EI). This “AI-Human Alliance” represents a transformative opportunity for organizations to deliver exceptional CX at scale: personalized, accurate, compassionate, and profitable.

This is an interactive workshop where we’ll explore how to unlock the power of human interaction through technology to boost satisfaction, streamline processes, and empower employees to deliver faster, more personalized, and emotionally intelligent interactions.

Key takeaways:

- AI-powered strategies: Optimize processes, enhance customer experiences, and drive efficiency through the AI-Human Alliance.

- Empowered workforce: Leverage AI to resolve complex issues, foster customer loyalty, and drive growth through predictive insights, personalization, and process automation.

- Scalable, agile operations: Design a CX model that balances technological innovation with human ingenuity for your organization’s competitive edge.

Join us for this hands-on session that will reshape how you think about AI, operations, and customer service—leaving you with actionable strategies to drive real impact.

Traditional customer research captures moments in time, but customers experience brands over months and years. What if you could continuously capture authentic customer stories as they unfold, revealing hidden pain points and opportunities that surveys and focus groups miss?

This hands-on workshop introduces a transformative approach to customer understanding: creating a living library of real-world experiences that evolves alongside changing customer needs. Through video diaries, longitudinal tracking, and AI-powered analysis, organizations can move beyond point-in-time data to understand the Total Experience in real time.

Through interactive exercises and real-world examples, you’ll learn how to:

- Build rich video repositories capturing authentic customer interactions

- Identify emerging trends and friction points before they appear in traditional metrics

- Transform ethnographic insights into actionable CX improvements

- Use AI to analyze thousands of customer moments and surface meaningful patterns

- Design a continuous customer listening program that reveals unmet needs

- Create feedback loops that drive rapid CX optimization

- Build internal support for ethnographic research approaches

- Connect customer stories to business outcomes to drive revenue growth and retention

Workshop Outcomes:

- Tools for identifying key customer moments to capture and analyze

- Strategies for translating ethnographic insights into measurable CX improvements

- Methods for communicating the business impact of longitudinal research

- Templates for crafting compelling customer stories that drive organizational change

- An action plan for shifting from periodic to continuous customer research

Join us to explore how continuous ethnographic insights can transform your CX strategy and create deeper customer relationships.

The hype around the potential of generative AI (GenAI) to transform customer experience is real, and so is its potential to create meaningful lift and impact for businesses. The catch is that it’s not a one-size-fits-all approach: GenAI is most effective when housed within structured business processes. Join NLX Chief Customer Officer Christian Wagner and Field CTO Josh Schairbaum for a discussion that unpacks how GenAI can deliver the most value for enterprises.

We will explore strategies for applying GenAI to customer-facing use cases and for identifying the best use cases to start with, then go under the hood to show attendees how to build a workflow that taps GenAI within structured business processes.

You wouldn’t use a hose to build a desk, just as you wouldn’t rely on a shark as an emotional support animal. Having the right tool for the job is crucial. So why do so many companies still rely on PDFs for their knowledge management system?

Join Atlanticus and livepro for an insightful discussion on how Atlanticus transitioned to a more effective knowledge management system and the transformative impact it had on its operations. We will walk you through the journey, including the challenges faced, key learnings, and measurable results. By the end of this session, you will gain actionable insights into how a premier knowledge management solution can drive efficiency, enhance customer experience, and future-proof your organization.

Key Takeaways:

- Common pitfalls of ineffective knowledge management systems

- Why Atlanticus chose livepro to overcome these challenges

- The measurable impact of livepro’s implementation on efficiency and customer service

- Successes and challenges encountered during the transition

- How integrations with other tools amplified positive outcomes

- The surprising role of a good mascot

- The evolving relationship between AI and Knowledge Management and its impact on the future of customer service

Discover how Mosaicx and Bank OZK have partnered for more than four years to continuously modernize their customer experience. In this mini case study session, you’ll learn how Bank OZK transitioned from a legacy IVR system to an AI-powered Intelligent Virtual Agent (IVA), now known to customers as Ozzy. Since implementation, Bank OZK has achieved significant improvements in customer interactions, including a 4% year-over-year increase in containment, while consistently streamlining its operations for both general business and local branches.

Attendees will gain insights into:

- The strategies behind Bank OZK’s successful migration to AI-driven customer interactions.

- How end-to-end call analytics unlock deeper insights into customer interactions to drive greater automation and adoption as well as predictive intent within and beyond Bank OZK’s IVA.

- Real-world lessons on scaling AI capabilities for sustained growth and improved customer satisfaction.

Traditional Learning Management Systems (LMS) have been a cornerstone of employee training for years, but they often fail to keep pace with the fast-changing demands of today’s CX teams. Too often, these programs turn into check-the-box exercises, delivering little value and falling short of creating real impact.

In this session, Reddy’s CEO and Harte Hanks reveal how they reimagined CX training by developing a next-generation program built on AI-driven simulations, continuous learning, and applied skill development. By revamping the curriculum for a Fortune 500 client, they created a transformative solution that not only elevated agent performance but also delivered superior customer outcomes and a better overall agent experience.

Discover how this innovative approach redefined Harte Hanks’ training strategy—reducing ramp time, cutting average handle time (AHT), improving call quality, and empowering agents to excel in dynamic, high-pressure environments.

CX leaders understand AI’s impact on helping customers, but there are important choices to navigate. Should you invest significant time and resources in custom-building a solution, or take an afternoon to launch one and start helping customers immediately? Speed to operation matters, and adopting AI that impacts CX doesn’t have to be a long, complex process.

Flip has powered ninety percent of successful Voice AI deployments by retail eCommerce brands. In this live session, you’ll get hands-on experience as Flip enables a retailer to integrate and deploy Voice AI in a single afternoon. Witness instant automation and implementation, with customer experience improvements from Day 1.

Attendees will:

- Hear Flip’s Voice AI in action as it interacts with a caller and resolves a real-time support issue.

- Learn how Voice AI can enhance customer experience metrics while reducing costs.

- Discuss how Voice AI can transform your phone channel into a revenue-generating asset.

Quality assurance is an important part of every great CX strategy, but reviewing 100% of agent-customer conversations across every channel is an impossible task – until now.

It’s time to take the guesswork out of quality assurance and let AI do the heavy lifting. During this session, you’ll see practical, real-world examples of how automated QA reduces customer churn, saves time and resources, and pinpoints agent training needs, all while boosting service quality and customer satisfaction. You’ll leave with the knowledge and tools needed to leverage automation for a competitive edge, along with real-life stories and data to back it all up.

Key takeaways include:

- How automated QA tools improve the customer and agent experience

- Practical strategies for implementing automated QA

- A look at reporting tools to help you gain insights and review performance trends

- Case studies of organizations already realizing the benefits of automated QA

AI is evolving fast, and CX leaders are under pressure to take action and deliver real results, all while navigating legacy systems, upskilling teams, addressing privacy concerns, and sifting through an overwhelming number of solutions to separate real value from hype. Without a clear, strategic roadmap, AI initiatives risk losing momentum before they create meaningful impact. In this interactive session, you’ll walk away with actionable strategies to define, fund, and execute an AI-powered CX strategy that drives tangible results in your business. By designing your own AI Success Roadmap aligned with your unique goals, you’ll be equipped to secure stakeholder buy-in and lead transformative CX outcomes.

Join Alvin Stokes, VP of Global Reservation and Service Operations at Princess Cruises, and harpin AI’s CEO, Scott Sahadi, as they reveal how a data-driven transformation reshaped Princess Cruises’ call center operations, improved guest experiences, and unlocked revenue opportunities.

Working together, they realized that prioritizing data quality, an often-neglected challenge, and enhancing Princess’ data pipelines could significantly boost ROI.

They improved data accuracy, integrated systems, and equipped agents with real-time insights and tools, then turned those improvements into tangible results: reduced churn, lower costs, and stronger customer loyalty through personalized service.

Princess Cruises turned customer data quality from an overlooked challenge into a strategic advantage, enabling a successful omnichannel approach that delivered seamless service across customer touchpoints.

During this session, Alvin and Scott will discuss:

- How to fix fragmented data and start your customer data hydration journey.

- How to leverage observability and data science to investigate data quality issues.

- How to implement key initiatives that reduce costs and drive revenue growth.

- How to use data-driven insights to improve retention, reduce service costs, and enhance guest experiences.

- How to integrate disconnected systems and eliminate inefficiencies while maximizing existing investments in people, processes, and technology.

This transformation gave Princess the visibility it had been missing, turning an invisible problem into one it could track, understand, and resolve. Don’t miss this opportunity to hear from industry leaders about the strategies behind their success.

Join us for an insightful roundtable discussion on Turo’s AI adoption journey and its transformative impact on customer support operations. Jerry Howe, VP of Customer Support at Turo, and Dave Sheridan, VP of Sales & Marketing at Laivly, will share how Turo implemented AI using a structured “walk-run-fly” methodology: starting with AI-powered transcription and insights, scaling into real-time agent guidance, and laying the foundation for automation and quality assurance.

Discover how Turo leveraged AI to scale customer support while maintaining quality, improve agent decision-making, and streamline workflows. Learn how their phased approach enabled a smooth transition from AI-driven insights to automation, driving efficiency and customer satisfaction.

In this discussion, you’ll gain practical tips on:

- Walk – Using AI-powered transcription to capture conversation insights and enhance documentation